Multimodal generative artificial intelligence is a popular type of artificial intelligence (AI) today: a computer program that generates content that looks as if a human made it. It can do many things, such as holding a conversation as if there were a person on the other side of the screen, writing program code, and creating images and videos from scratch based on a description.

If we look at where multimodal generative AI models are already being applied, we can name a large number of industries and some of the most unexpected projects, from education and medicine to big data processing and predictive analytics.

In this article, we give a comprehensive overview of multimodal generative AI: its capabilities, the core technologies behind it, and why it can be useful for your business.

What is multimodal generative AI

Definition of multimodal AI

Generative AI, also known as GenAI, is one of the fastest-growing branches of artificial intelligence. It creates things that used to take a great deal of time to produce, most notably content in textual, audio, and visual form.

Multimodal generative AI models use datasets as a basis for learning. They don't simply recombine the data in response to a query but create new content from scratch. Therein lies the main difference from traditional AI, which analyzes the differences between various types of multimodal data.

Here's a basic example. If one asks a traditional AI a question like "Is there a person walking on the road or a car driving by?", it is most likely to respond accurately and unambiguously.

On the other hand, a generative AI can be asked to draw a picture of a person walking on one road and being overtaken by a car. It will cope with this task no less successfully - the result will be a drawing, not a text. Thus, multimodal generative AI creates new content based on what it has learned from previously generated content, and this happens in the process of continuous AI learning.

ChatGPT is one example of multimodal AI, and its basic version is available to a wide range of users.

How generative AI integrates with multimodal data

Multimodal AI is a subset of artificial intelligence that integrates information from various modalities, such as text, images, audio, and video, to build more accurate and comprehensive multimodal generative AI models. Here are some of the ways it does this:

- Generating images from text;

- Generating text from images;

- Generating captions associated with images;

- Generating captions or stories related to visual content;

- Searching for visually similar images based on a given text description.
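The last item in the list, searching for images by text description, is commonly built on a shared embedding space: text and images are turned into vectors, and images are ranked by how close their vectors are to the query vector. Below is a minimal sketch of that ranking step. The three-dimensional embeddings and the file names are hypothetical and written by hand; in a real system they would come from trained encoders.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_images(text_embedding, image_embeddings):
    """Return image names sorted from most to least similar to the text."""
    return sorted(
        image_embeddings,
        key=lambda name: cosine_similarity(text_embedding, image_embeddings[name]),
        reverse=True,
    )

# Hypothetical 3-dimensional embeddings; real encoders produce far more dimensions.
IMAGE_EMBEDDINGS = {
    "car_on_road.png": [0.9, 0.1, 0.0],
    "person_walking.png": [0.1, 0.9, 0.1],
    "empty_beach.png": [0.0, 0.1, 0.9],
}
# Stands in for the encoded query "a person walking on the road".
QUERY_EMBEDDING = [0.2, 0.8, 0.0]

if __name__ == "__main__":
    print(rank_images(QUERY_EMBEDDING, IMAGE_EMBEDDINGS))
```

With these made-up vectors, the image of the walking person ranks first because its vector points in nearly the same direction as the query.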

Key components of multimodal generative AI systems

| Component | Description | Approach | Hurdles |
|---|---|---|---|
| Encoders | Transforms input data from different modalities (text, images, audio, etc.) into a common representation. | | |
| Multimodal fusion | Combines the encoded representations from different modalities into a unified representation. | | |
| Decoder | Generates the output content (text, images, etc.) from the fused multimodal representation. | | |
| Training data | Large and diverse datasets with paired multimodal AI examples of different modalities (e.g., images with captions). | | |
| Loss functions | Optimize the model's performance across different tasks and modalities (reconstruction, adversarial, cross-modal consistency). | | |
| Architectural choices | Neural network architectures like transformers, CNNs, RNNs that impact performance and capabilities. | | |
| Inference and generation | Generates the desired output using techniques like beam search, top-k sampling, temperature-based sampling. | | |
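Two of the inference techniques named in the table's last row, temperature-based sampling and top-k sampling, can be illustrated in a few lines. The sketch below assumes the model has already produced one raw score (logit) per candidate token; the function name and parameters are illustrative and not taken from any particular library.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Sample a token index from raw scores using temperature and optional top-k."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if top_k is not None and top_k < 1:
        raise ValueError("top_k must be at least 1")
    # Order candidates from highest to lowest score, then keep the best k if requested.
    indices = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    if top_k is not None:
        indices = indices[:top_k]
    # Temperature scaling: values below 1 sharpen the distribution, above 1 flatten it.
    scaled = [logits[i] / temperature for i in indices]
    # Softmax weights, with the maximum subtracted for numerical stability.
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(indices, weights=weights, k=1)[0]
```

Setting `top_k=1` always returns the highest-scoring token, while a larger `top_k` or a higher temperature makes the output more varied.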

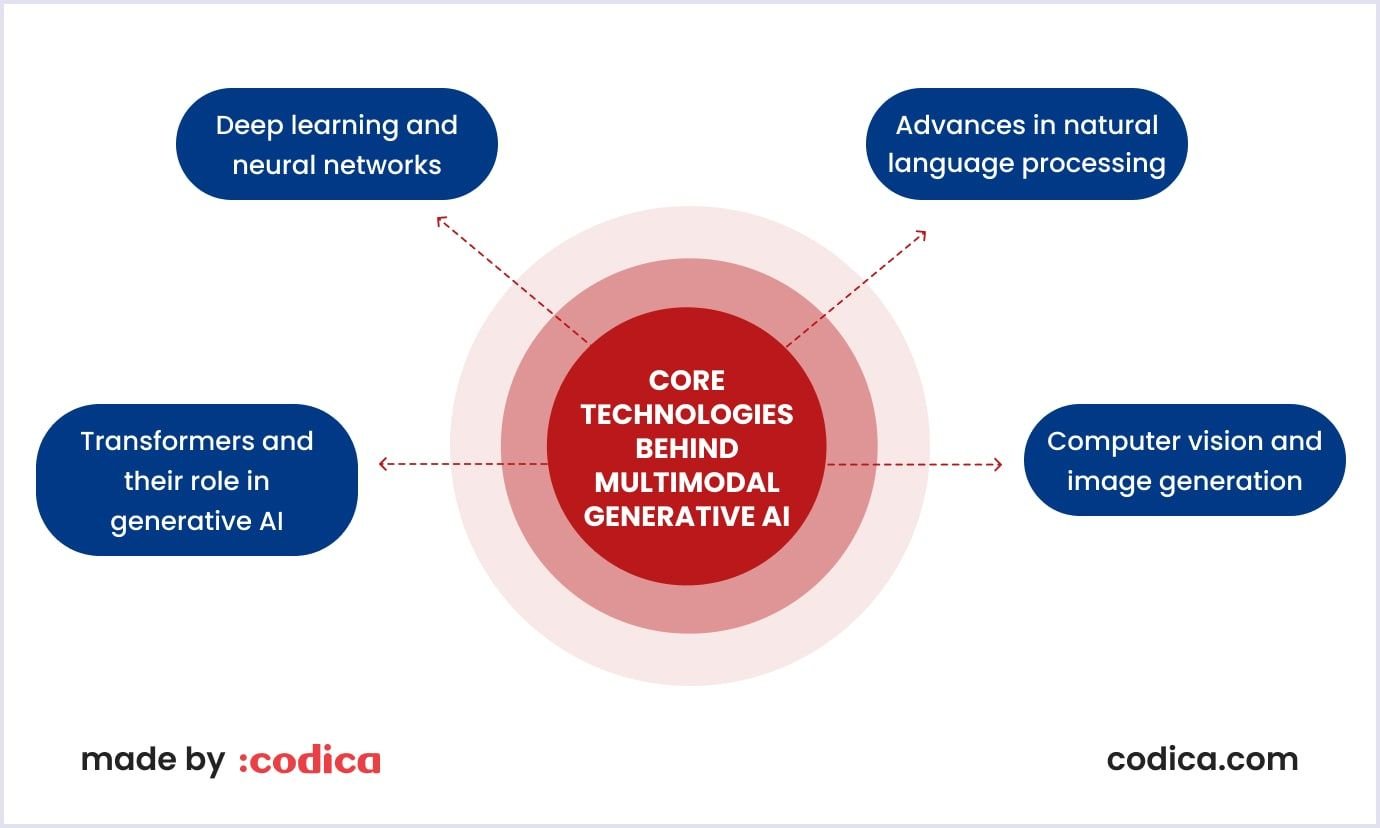

Core technologies behind multimodal generative AI

Deep learning and neural networks

The cornerstone of multimodal generative AI is deep learning. It's a type of machine learning that imitates the way the human brain works in processing data and creating patterns for decision-making. Deep learning models use sophisticated architectures popularly known as artificial neural networks. Such networks include multiple interconnected layers that process and transmit information, mimicking neurons in the human brain.
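To make the idea of interconnected layers concrete, here is a minimal sketch of a forward pass through a tiny network. The layer sizes and all weights are hypothetical and hand-picked for illustration; a real model learns its weights from data and has vastly more of them.

```python
import math

def dense_layer(inputs, weights, biases):
    """One fully connected layer followed by a sigmoid activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1 / (1 + math.exp(-total)))
    return outputs

def forward(inputs, layers):
    """Pass the inputs through each (weights, biases) layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical parameters: 2 inputs -> 3 hidden neurons -> 1 output.
LAYERS = [
    ([[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]

if __name__ == "__main__":
    print(forward([1.0, 2.0], LAYERS))
```

Each layer takes the previous layer's outputs as its inputs, which is what "processing and transmitting information" amounts to in code.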

Transformers and their role in generative AI

Transformer-based multimodal generative AI models use an encoder-decoder structure, a concept they share with the variational autoencoder (VAE). Transformer-based models add new layers to the encoder to improve performance on text-based tasks, including comprehension, translation, and creative writing.

Transformer-based models use a self-attention mechanism: when processing each element of an input sequence, they weigh the importance of the other parts of that sequence.
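The weighting step described above can be sketched as scaled dot-product attention. This is a simplified illustration, not a full transformer layer: it assumes the queries, keys, and values are the input vectors themselves, whereas real models first apply learned projection matrices.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(sequence):
    """Scaled dot-product self-attention with queries = keys = values = inputs."""
    dim = len(sequence[0])
    outputs, all_weights = [], []
    for query in sequence:
        # Score every position against the current one, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in sequence
        ]
        weights = softmax(scores)
        # Each output is the weighted average of all input vectors.
        mixed = [
            sum(w * vec[d] for w, vec in zip(weights, sequence))
            for d in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights
```

For an input such as `[[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]`, the first element gives more weight to the third element, which matches it, than to the second.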

Advances in natural language processing (NLP)

Multimodal generative AI relies heavily on advancements in NLP, which have enabled machines to understand, interpret, and generate human-like text. Key developments include the use of transformer-based models, like BERT and GPT, that can capture contextual information and generate coherent and natural-sounding language.

Computer vision and image generation techniques

Multimodal generative AI also leverages breakthroughs in computer vision and image generation. Techniques like convolutional neural networks (CNNs) have enabled machines to analyze and understand visual information with increasing accuracy.

Additionally, the development of generative adversarial networks (GANs) and variational autoencoders (VAEs) has made it possible to generate high-quality, realistic-looking images from scratch or based on textual descriptions.
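The core operation inside the CNNs mentioned above is a small filter (kernel) sliding over an image. The sketch below shows that operation on a hypothetical 4x4 grayscale patch with a hand-written edge kernel; in a trained network the kernel values are learned rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers apply."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

# Hypothetical grayscale patch: dark on the left, bright on the right.
IMAGE = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written kernel that responds to left-to-right increases in brightness.
EDGE_KERNEL = [
    [-1, 1],
    [-1, 1],
]

if __name__ == "__main__":
    for row in convolve2d(IMAGE, EDGE_KERNEL):
        print(row)  # each row is [0, 2, 0]: the response peaks at the edge
```

The output is non-zero only where the kernel straddles the boundary between dark and bright pixels, which is how a convolutional layer detects local visual features.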

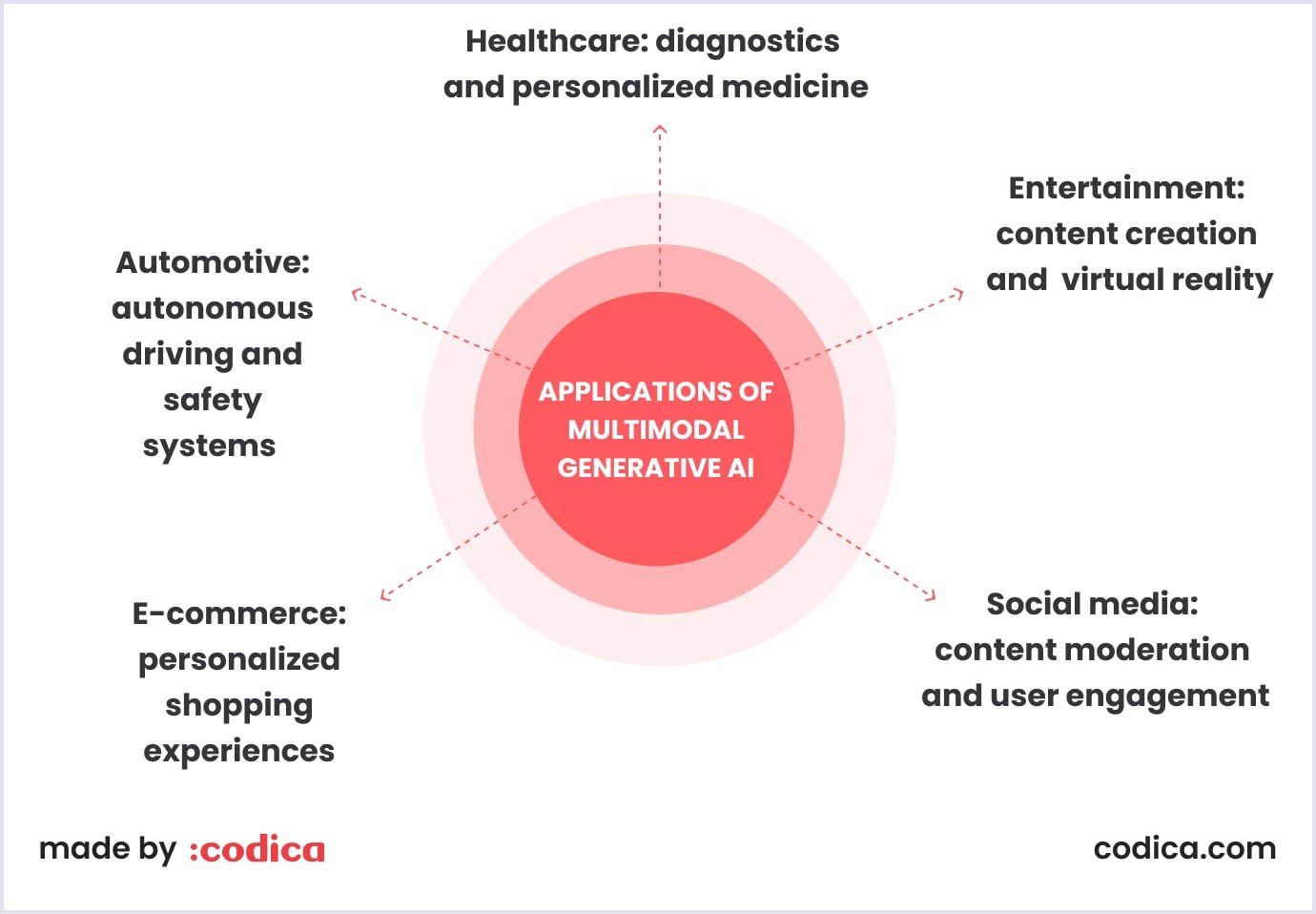

Applications of multimodal generative AI

In the long term, generative multimodal AI will be applied in almost every industry, but we can already identify several priority areas where its use will have the greatest impact and benefit.

Healthcare: diagnostics and personalized medicine

Today, the healthcare industry has become one of the main platforms for the practical implementation of AI technologies. The market volume of AI solutions for healthcare today is approximately $28 billion. It is expected to grow at a compound annual growth rate of 37% over the next few years, reaching nearly $188 billion by 2030.
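As a quick sanity check on these figures, the compound growth formula can be applied directly. The sketch below assumes the 37% rate compounds annually from the $28 billion base; the function names are illustrative.

```python
import math

def years_to_reach(start_value, target_value, annual_growth_rate):
    """Years of compound growth needed to go from start_value to target_value."""
    return math.log(target_value / start_value) / math.log(1 + annual_growth_rate)

def project(start_value, annual_growth_rate, years):
    """Value after compounding at annual_growth_rate for the given number of years."""
    return start_value * (1 + annual_growth_rate) ** years

if __name__ == "__main__":
    print(round(years_to_reach(28, 188, 0.37), 1))  # years needed, in billions of USD terms
    print(round(project(28, 0.37, 6)))              # projected market size after 6 years
```

Under that assumption, growing from $28 billion to $188 billion takes about six years, and six years of 37% growth yields roughly $185 billion, so the quoted figures are consistent with each other.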

Artificial intelligence algorithms are opening up new possibilities for personalizing prevention and treatment. Such tools can analyze a patient's medical records, medical history, genetic information, and lifestyle factors to predict disease risks and tailor treatment options.

Such personalization can be essential in therapy. For example, algorithms can help doctors select the best chemotherapy drugs for a cancer patient, taking into account his or her genetic information. AI can also determine the optimal dose of a drug or radiotherapy for a patient based on a comprehensive analysis of their physical condition and medical history.

Automotive: autonomous driving and safety systems

Multimodal generative AI plays an important role in safety, especially in the development of autonomous vehicles. Training an autonomous driving system requires countless driving situations, including dangerous and exceptional scenarios.

Generative AI can create realistic simulations to improve the safety of autonomous vehicle control algorithms before they are put into practice on public roads. Generative AI can also model solutions to current problems in real time, aiding autonomous vehicle decision-making. It can provide relevant predictions of road user behavior, which helps improve the safety of drivers and pedestrians. Generative multimodal AI can manage complex driving scenarios, making them safer and smoother.

Although multimodal AI brings significant improvements to autonomous vehicles, these vehicles still have limitations. Big companies like Tesla, Amazon, and Mercedes-Benz are still working on improving self-driving technologies to ensure road safety, so human assistance is still needed.

E-commerce: personalized shopping experiences

Online commerce has long relied on a targeted and personalized approach, where the user experience is tailored to the needs of the customer. Targeted advertising on social networks, algorithms that select offers based on search history - these are all examples of personalization in e-commerce.

Artificial intelligence is opening up a new phase in the development of personalization tools. Machine learning algorithms make it possible to process huge amounts of data in real time, allowing online stores to develop a sales strategy not just for individual categories of consumers, but also for each individual user. This approach is called hyper-personalization: it allows you to find an individual approach to literally every customer, providing the most relevant and timely offers.

Entertainment: content creation and virtual reality

One of the most obvious applications of AI for online commerce is the creation of text, image, audio, and video content. These skills can be used in a variety of ways: in the creation of marketing materials, product descriptions, loans, catalogue websites, etc.

Multimodal generative AI such as ChatGPT can be used for blogging and social networking. Many brands already run their social media accounts using generative AI. But the possibilities of generative AI don't stop there: it can also be used for design, visualization of offers, and more. Jewelry brand J'evar is a good example of multimodal AI in practice. The company routinely uses AI to design jewelry and claims that multimodal generative AI models save its designers a lot of time.

Social media: content moderation and user engagement

With the advent of AI technologies, social media gives us insights that would never have been available to any one person or group of people. There are many artificial intelligence tools on the market to help with social media management, content creation, analytics, advertising, and more.

AI could take over many time-consuming and monotonous social media tasks, freeing up teams to focus on something else. This time could then be used to interact organically with customers, plan multimedia campaigns or work on larger projects.


Benefits and challenges of multimodal generative AI

With the theory covered, let's outline the benefits and challenges of GenAI, beginning with the benefits.

| Benefit | Description |
|---|---|
| Enhanced data understanding and context | A fundamental advantage of multimodal models is their ability to better understand the context and nuances of tasks. For example, such a model can not only recognize an object in an image, but also describe it with text, translate text into an audio file with a synthesized voice, or create a video based on a text query. |
| Improved accuracy and performance | The integration of diverse data sources and modalities can lead to more robust and accurate AI models, as the system can leverage complementary information to make more informed predictions and decisions. |
| Greater adaptability and flexibility | Multimodal AI systems can be more adaptable and flexible to changing environments, user preferences, or task requirements, as they can dynamically adjust their input and output modalities based on the specific context. |
| Potential for innovation in various industries | The enhanced capabilities of multimodal AI can unlock new opportunities for innovation and disruptive applications across a wide range of industries, from entertainment and education to healthcare and manufacturing. |

Despite these benefits, multimodal GenAI has its operational challenges.

| Challenge | Description |
|---|---|
| Data quality and integration issues | Multimodal AI models require high-quality, diverse, and well-annotated datasets spanning multiple modalities, which can be technically challenging to collect, curate, and integrate due to the varied nature of the data sources and formats. |
| Computational resource requirements | Multimodal AI models tend to be highly complex, with substantial memory and processing power requirements that often necessitate specialized hardware like powerful GPUs or distributed computing clusters. |
| Security and privacy concerns | Privacy and data protection also require attention, given the sheer volume of personal data that multimodal AI systems can handle. Questions may arise about data ownership, consent to data transfer, and protection from misuse in an environment where humans cannot fully control the output of AI. |

Best practices for implementing multimodal generative AI

In the rapidly evolving world of technology, AI is gaining prominence in today's businesses. Integrating AI into business processes is becoming a key step toward improving the productivity and marketability of companies.

Steps to integrate multimodal AI in projects

Several steps should be followed for successful integration of multimodal AI.

Step 1: Setting goals and collecting data

First, clearly define the business problems you want to solve with multimodal AI. These can be anything: improving user interaction, automating business processes, improving recognition accuracy, etc. After determining your objectives, work out what types of data you'll be using in your project: images, text, speech, video, etc. Then collect the required datasets and pre-process (clean) them to improve their quality.

Step 2: Selecting and training the model

Review the available multimodal model architectures (e.g., transformers, convolutional neural networks, recurrent networks) and select the most appropriate one for your task. Then develop a prototype of the multimodal model using suitable frameworks and libraries (e.g., PyTorch, TensorFlow). Train the model on the collected data using established training techniques. Assess model performance on test data and iterate on the model to improve accuracy.
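The "assess on test data" part of this step can be sketched without any framework. The example below holds out part of the data and measures accuracy; the majority-class baseline is a hypothetical stand-in for a real trained model and is useful mainly as a floor that a real model should beat.

```python
import random
from collections import Counter

def train_test_split(samples, test_fraction=0.2, seed=0):
    """Shuffle a copy of the samples and hold out a fraction for testing."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    test_size = max(1, int(len(shuffled) * test_fraction))
    return shuffled[test_size:], shuffled[:test_size]

def accuracy(predict, labelled_samples):
    """Share of samples for which predict(features) matches the label."""
    correct = sum(
        1 for features, label in labelled_samples if predict(features) == label
    )
    return correct / len(labelled_samples)

def majority_baseline(train_samples):
    """Placeholder 'model' that always predicts the most common training label."""
    most_common_label = Counter(label for _, label in train_samples).most_common(1)[0][0]
    return lambda features: most_common_label
```

Keeping the test set separate from the training set is what makes the accuracy figure meaningful: a model evaluated on data it has already seen will look better than it is.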

Step 3: Integrating into the system

If you plan to integrate multimodal generative AI into an existing system or application, you will need to develop APIs or modules for integration. Then test the performance of the integrated system in real conditions and evaluate its key indicators: productivity, response time, and reliability.
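Response time and reliability can be measured with a small harness around whatever function calls the integrated model. This is a minimal sketch: `call` is a hypothetical placeholder for your own integration code, and the reported metrics are one reasonable choice rather than a standard.

```python
import statistics
import time

def measure_endpoint(call, requests, clock=time.perf_counter):
    """Run call(request) for each request and report latency and success rate."""
    latencies, failures = [], 0
    for request in requests:
        started = clock()
        try:
            call(request)
        except Exception:
            # Any exception counts as a failed request in this sketch.
            failures += 1
        latencies.append(clock() - started)
    return {
        "mean_seconds": statistics.mean(latencies),
        "max_seconds": max(latencies),
        "success_rate": 1 - failures / len(latencies),
    }
```

In practice you would run this against a representative set of real requests and track the numbers over time, since averages alone can hide occasional slow or failed calls.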

Step 4: Continuous optimization and improvement

Customer feedback is hugely important, so collect it systematically. After analyzing the results of using the multimodal system, pinpoint areas for improvement: greater accuracy, speed, usability, etc. Implement the necessary changes to the model, and keep upgrading the multimodal system based on ongoing user feedback.

Tools and frameworks commonly used

| Tool/Framework | Description | Strengths | Use cases |
|---|---|---|---|
| OpenAI DALL-E | Transformer-based model that generates images from text descriptions. | High-quality text-to-image generation, versatile use cases. | Creative content generation, product visualization, image editing. |
| Google Imagen | Diffusion-based model for text-to-image generation. | Scalable, high-fidelity image generation. | Creative applications, product design, educational content. |
| Stable Diffusion | Open-source text-to-image diffusion model. | Highly customizable, open-source, efficient. | Creative content generation, image editing, artistic applications. |
| Midjourney | AI-powered text-to-image generation tool. | Intuitive user interface, wide range of creative applications. | Creative content generation, product visualization, art creation. |
| PaLM | Pretrained large language model from Google that can be fine-tuned for multimodal tasks. | Strong natural language capabilities, can be fine-tuned for multimodal tasks. | Natural language understanding, image captioning, visual question answering. |
| VisualGPT | GPT-based model for generating images from text. | Leverages the power of GPT language models, can generate diverse images. | Creative content generation, product design, educational materials. |
| Multimodal Transformers | Models like ViLT, UNITER, and LXMERT that jointly process text and vision inputs. | Capture cross-modal interactions and learn joint representations. | Visual question answering, image captioning, multimodal reasoning. |

Recommendations for businesses and developers

- Start small and iterate: Begin with a focused use case and gradually expand the capabilities of your multimodal AI system. This allows you to learn from early deployments and improve the system iteratively.

- Prioritize user experience: Design the AI interface and interactions with the end-user in mind. Ensure the multimodal experience is intuitive, engaging, and adds value to the user.

- Diversify the training data: Collect a diverse set of training data that represents different demographics, perspectives, and use cases. This helps the AI system be more inclusive and unbiased.

- Invest in explainability: Incorporate explainable AI techniques to help users understand the reasoning behind the AI's outputs. This builds trust and transparency.

- Stay informed of advancements: Keep track of the latest research and industry developments in multimodal AI. Regularly assess your system's capabilities and identify opportunities for enhancement.

- Foster interdisciplinary collaboration: Bring together experts from various domains, such as machine learning, user experience, ethics, and domain-specific fields, to collaborate on the development and deployment of multimodal AI.

Codica's AI development services include facilitating interdisciplinary teamwork across areas like machine learning, user experience, and domain-specific fields.

Future trends and predictions

As generative AI models continue to evolve and shape our world, people, organizations, and governments must adapt and prepare for the far-reaching implications of these technologies.

Emerging technologies and research directions

Generative AI is expected to make significant strides, with new architectures like transformer-based models and diffusion models enabling more versatile and robust synthetic content generation. Researchers will also focus on few-shot learning and common sense reasoning to build AI systems that can adapt quickly and exhibit more intelligent behavior.

Potential advancements in multimodal generative AI

The convergence of language, vision, and audio in generative AI models will accelerate, transforming content creation, virtual assistance, and human-AI collaboration. These multimodal systems will be able to generate and manipulate contextually relevant combinations of text, images, and audio, blurring the lines between the digital and physical worlds.

Long-term impact on different industries

Businesses and institutions should continuously monitor AI developments and explore how generative AI models can be used to optimize their operations, innovation and decision-making. This may require investing in AI research and development, integrating AI-based tools into existing workflows, and redefining job roles to support human-AI collaboration.

Vision for the next decade in AI development

As generative AI becomes more widespread, it will revolutionize industries and unlock unprecedented human creativity. Continued advancements in areas like few-shot learning and causal reasoning will be crucial to harnessing the transformative power of these technologies for the betterment of society. Ensuring the responsible and ethical development of safe, reliable generative AI systems will be paramount as they become increasingly capable and autonomous.

How Codica can help with multimodal generative AI

Our artificial intelligence development services help businesses automate operations and workflows and make informed decisions. By hiring our AI developers, clients have sped up their business, personalized customer experiences, improved monitoring, and reduced human error, leading to lower costs and higher productivity.

Check out our portfolio highlighting successful web projects that help our clients thrive.

Conclusion

Multimodal AI brings AI to new heights, allowing for richer and deeper insights than were previously possible. However, no matter how "smart" AI becomes, it can never replace the human mind with its multifaceted knowledge, intuition, experience, and reasoning.

If you have a project idea that requires AI, contact us. Our experts will help you plan, implement, and support your solution.