Nowadays, creating software products without thorough preplanning is virtually impossible due to their complexity. One crucial part of this planning is the tech stack you will use to build the product.

Most products rely on several, or even a dozen, tools, programming languages, and frameworks. Things get even more complicated once AI enters the picture, because the tech stack also has to account for it. So, in this piece, we’ll break down the AI tech stack and explain what goes into it.

What is an AI tech stack?

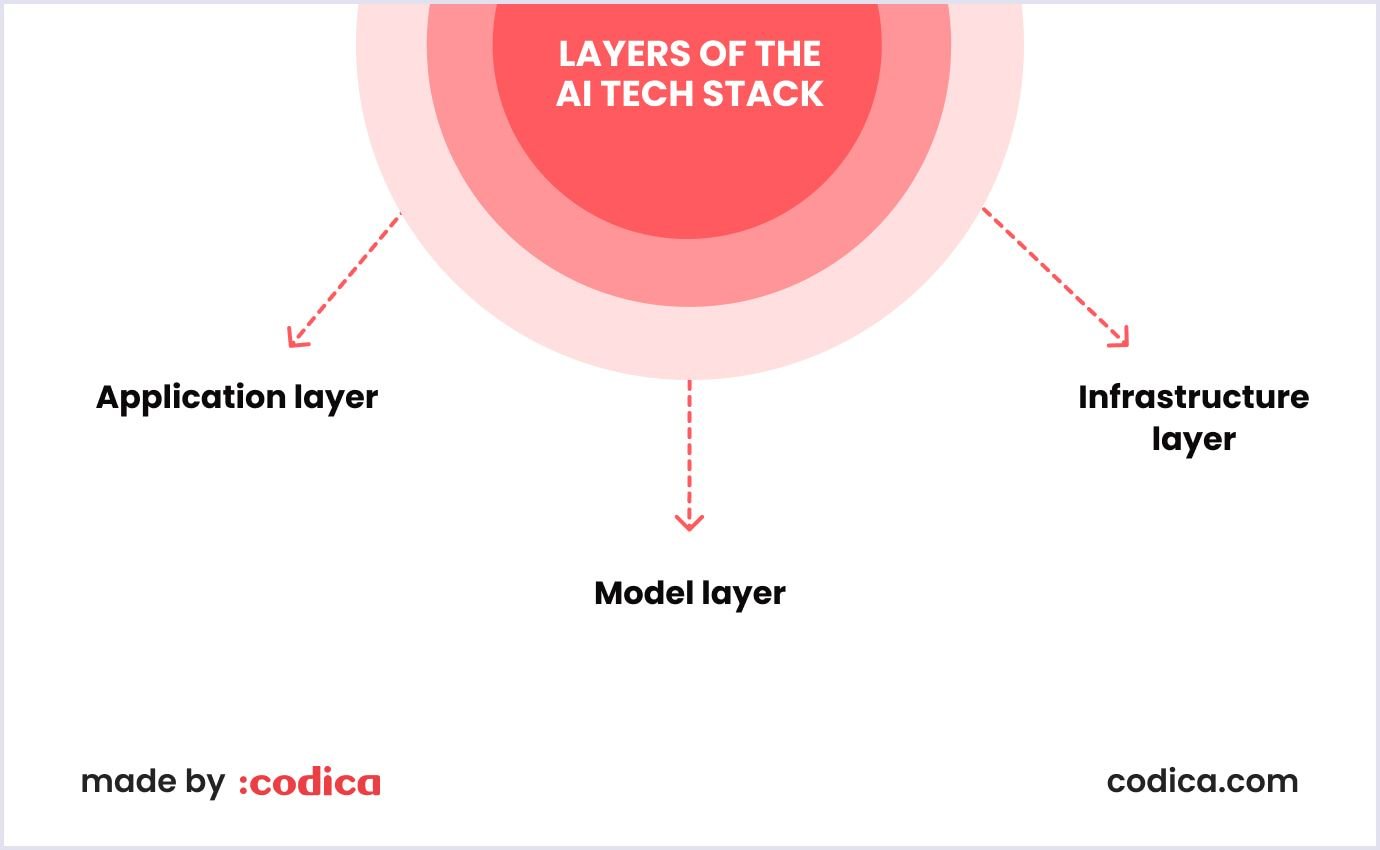

Basically, an AI tech stack is the set of tools and frameworks used to create, deploy, and maintain AI systems. Much like a traditional technology stack, it consists of several layers. Each layer serves a specific purpose and works together with the others to create a functional system. These layers typically cover data processing, model development, deployment infrastructure, and more.

The main benefit of an AI tech stack is that it streamlines the AI development process. With a thoughtfully chosen stack, you can maintain consistency, scalability, and efficiency across your AI initiatives. It also helps your team avoid the pitfalls of integrating disparate tools and technologies. In simpler terms, a robust AI tech stack is the foundation for building high-quality AI applications.

Layers of the AI tech stack

Application layer

To start with, the application layer is the topmost layer of the AI tech stack. This is where users interact with your AI-powered app. The layer includes the tools, frameworks, and interfaces that let developers bring AI models into user-facing applications. Its main purpose is to deliver a seamless UX that makes full use of what the underlying AI models offer.

For more context, here are the most fundamental elements of this layer:

- User interfaces (UI). Various interfaces like desktop, mobile, and web apps that let users interact with AI functionalities.

- API gateways. Middleware that connects the application to the AI models, allowing for easy integration and communication between different components.

- Frontend frameworks. To build responsive and interactive user interfaces, technologies like Angular, React and Vue.js are all great choices.

- Backend services. Server-side components that manage business logic, handle user requests, and process data before it is sent to AI models.
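To make the backend services item more concrete, here is a minimal sketch of the logic a backend runs between the UI and a model: validate the request, call the model, and shape the response. The handler name `handle_request` and the `toy_sentiment_model` function are hypothetical stand-ins; a real system would sit behind a web framework or API gateway and call an actual trained model.

```python
import json


# Hypothetical stand-in for a trained model; in a real system this would be
# loaded from a model registry or called through a model-serving endpoint.
def toy_sentiment_model(text: str) -> float:
    """Return a score in [0, 1]: here, simply the share of 'positive' words."""
    positive = {"good", "great", "love", "excellent"}
    words = text.lower().split()
    if not words:
        return 0.5
    return sum(w in positive for w in words) / len(words)


def handle_request(raw_body: str) -> tuple[int, str]:
    """Backend handler: validate input, call the model, shape the response.

    Returns an (HTTP status code, JSON body) pair.
    """
    try:
        payload = json.loads(raw_body)
    except json.JSONDecodeError:
        return 400, json.dumps({"error": "body must be valid JSON"})

    text = payload.get("text") if isinstance(payload, dict) else None
    if not isinstance(text, str) or not text.strip():
        return 400, json.dumps({"error": "field 'text' must be a non-empty string"})

    # Business logic around the model: cap the input size before inference.
    score = toy_sentiment_model(text[:1000])
    label = "positive" if score >= 0.5 else "negative"
    return 200, json.dumps({"label": label, "score": round(score, 3)})
```

Keeping validation and response shaping in the backend means the frontend never talks to the model directly, so the model can be swapped or scaled without changing the UI.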

So, why is this layer so important? For starters, it provides user accessibility, i.e., it makes AI capabilities accessible and easy to use for end users. Secondly, it allows the product to scale efficiently with demand. Lastly, it is customizable: thanks to its flexibility, developers can adjust the application layer to tailor AI functionality to changing needs and business goals.

Model layer

The model layer is the core of the AI tech stack, where AI models are developed, trained, and optimized. This layer involves various frameworks, libraries, and tools that data scientists and machine learning engineers use to create effective AI models.

The model layer’s fundamental parts include the following:

- Development frameworks. Tools such as TensorFlow, PyTorch, and Keras provide pre-built functions and algorithms for model development.

- Training environments. Platforms that support the training of models using large datasets, often leveraging GPUs and TPUs for accelerated processing.

- Hyperparameter tuning. Techniques and tools like Optuna and Hyperopt optimize model performance by fine-tuning hyperparameters.

- Model evaluation. These techniques assist in calculating the accuracy, precision, recall, and other performance indicators of AI models.
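As a minimal sketch of what model evaluation computes, the function below derives accuracy, precision, recall, and F1 for a binary classifier from scratch. In practice, scikit-learn's `sklearn.metrics` module provides `accuracy_score`, `precision_score`, and `recall_score`; the function name `classification_metrics` and the sample labels here are illustrative.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary classifier."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)

    accuracy = correct / len(pairs)
    # Precision: share of predicted positives that really are positive.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: share of actual positives the model managed to find.
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Illustrative labels, not results from a real model.
metrics = classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
```

Reporting precision and recall alongside accuracy matters because accuracy alone can look high on imbalanced data even when the model misses most positives.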

The model layer is just as important as the other two. It focuses on creating and delivering models that perform accurately across different tasks.

Besides, it supports the iterative process of experimenting with different algorithms, hyperparameters, and architectures to improve model outcomes. Finally, by keeping development and training processes consistent, the model layer makes models reproducible and easier to validate.

Infrastructure layer

Lastly, there is the infrastructure layer, which forms the foundation of the AI tech stack. It provides the computational resources, storage solutions, and deployment mechanisms that AI operations depend on, ensuring that AI models can be trained, deployed, and maintained at scale. The infrastructure layer usually consists of:

- Computational resources. High-performance hardware such as GPUs, TPUs, and cloud-based computing services (e.g., AWS, Google Cloud, Azure) that facilitate intensive AI computations.

- Data storage. Scalable storage solutions like data lakes, databases, and distributed file systems (e.g., Hadoop, Amazon S3) that manage large volumes of data required for training and inference.

- Deployment platforms. Tools and platforms like Kubernetes, Docker, and TensorFlow Serving that enable the deployment of AI models as scalable services.

- Monitoring and management. Systems such as Prometheus, Grafana, and MLflow that monitor the performance, health, and lifecycle of deployed models.

As a result, the infrastructure layer lets AI operations scale with demand, so the platform uses resources more efficiently. It is also designed to keep AI models and applications reliable and available to users around the clock, even under demand spikes or partial failures.

Moreover, this layer enforces security practices that protect data and models from unauthorized access and helps ensure compliance with relevant standards and regulations.

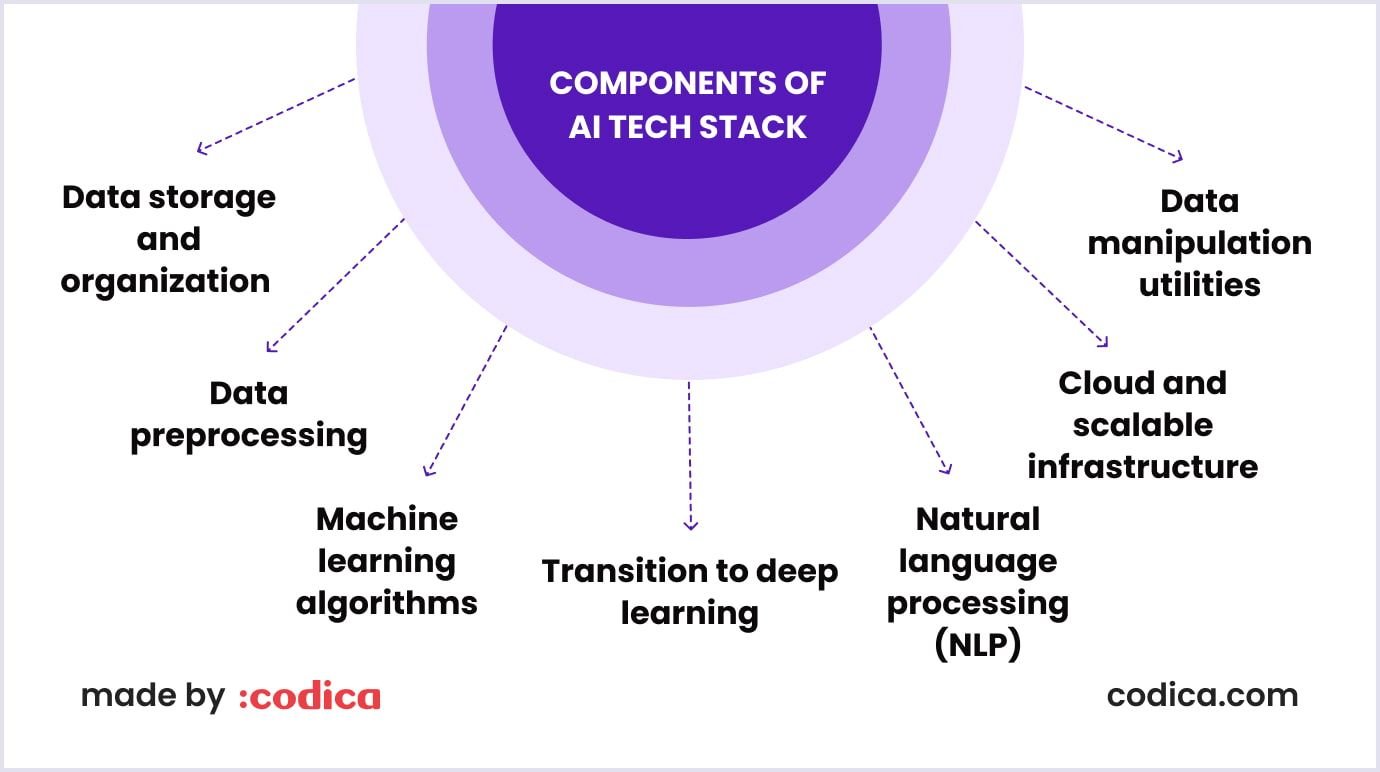

Components of the AI tech stack and their relevance

Data storage and organization

Effective data storage and organization are fundamental to AI development, ensuring that large volumes of data are readily accessible and efficiently processed. Key technologies include:

- SQL databases. For structured data with fixed schemas, such as MySQL, PostgreSQL, and Oracle. Ideal for transactional data and complex queries.

- NoSQL databases. For unstructured or semi-structured data with flexible schemas, such as MongoDB, Cassandra, and Couchbase. Suitable for large-scale, high-velocity data.

- Big data solutions. Technologies like Hadoop HDFS and Spark manage vast amounts of data across distributed environments. Hadoop HDFS provides scalable, reliable storage, while Spark offers fast data processing, essential for big data analytics and AI applications.
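To illustrate what a "fixed schema" means in practice for SQL databases, here is a small sketch using Python's built-in `sqlite3` module as a lightweight stand-in for MySQL or PostgreSQL. The `orders` table and its rows are hypothetical. The database rejects rows that break the schema, and an aggregate query turns raw transactions into per-customer features.

```python
import sqlite3

# In-memory SQLite database standing in for MySQL/PostgreSQL in this sketch.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer TEXT NOT NULL,
        amount REAL NOT NULL CHECK (amount >= 0)
    )
    """
)
rows = [("alice", 30.0), ("bob", 12.5), ("alice", 20.0)]
conn.executemany("INSERT INTO orders (customer, amount) VALUES (?, ?)", rows)
conn.commit()

# The fixed schema is enforced: a row without a customer is rejected.
try:
    conn.execute("INSERT INTO orders (customer, amount) VALUES (?, ?)", (None, 5.0))
    schema_enforced = False
except sqlite3.IntegrityError:
    schema_enforced = True

# A typical aggregate query used to build training features per customer.
features = conn.execute(
    "SELECT customer, COUNT(*), SUM(amount) FROM orders "
    "GROUP BY customer ORDER BY customer"
).fetchall()
```

A NoSQL document store would accept the customer-less record as-is, which is exactly the trade-off between flexible schemas and guaranteed structure described above.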

Data preprocessing and feature engineering

The data you collect is raw and often difficult to work with. That is why data preprocessing and feature engineering are crucial steps in preparing data for machine learning. These processes improve data quality and ensure that models receive clean, relevant data. Tools that simplify this task include:

- Scikit-learn. A Python library offering tools for data preprocessing, including normalization, encoding, and splitting datasets. It also provides algorithms for feature selection and extraction.

- Pandas. A Python library for data manipulation and analysis, Pandas is a reliable tool for transforming, analyzing, and cleaning data. Its DataFrame structure makes it especially convenient for large tabular datasets and complex operations such as joins, grouping, and reshaping.

- Principal Component Analysis (PCA). This dimensionality reduction technique projects high-dimensional data onto a smaller number of components while preserving as much of the variance as possible. In simpler terms, PCA can simplify models, and removing redundant dimensions often speeds up training and can improve performance.
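The two ideas above, normalization and PCA, can be sketched in plain Python. The code below z-score standardizes each feature and then finds the first principal component with power iteration on the covariance matrix. This is an illustrative re-implementation under the assumption of small, dense data; in real projects you would reach for scikit-learn's `StandardScaler` and `PCA` classes instead. The function names are hypothetical.

```python
import math


def standardize(rows):
    """Z-score each column: subtract the mean, divide by the standard deviation."""
    n = len(rows)
    cols = list(zip(*rows))
    means = [sum(c) / n for c in cols]
    # A zero standard deviation (constant column) falls back to 1.0.
    stds = [math.sqrt(sum((x - m) ** 2 for x in c) / n) or 1.0 for c, m in zip(cols, means)]
    return [[(x - m) / s for x, m, s in zip(r, means, stds)] for r in rows]


def first_principal_component(rows, iterations=200):
    """Top principal component via power iteration on the covariance matrix.

    Returns (unit direction vector, share of total variance it explains).
    """
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(d)] for i in range(d)]

    v = [1.0] * d  # starting guess; must not be orthogonal to the answer
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]

    # The Rayleigh quotient gives the eigenvalue, i.e. the variance along v.
    eigenvalue = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    total_variance = sum(cov[i][i] for i in range(d))
    return v, eigenvalue / total_variance


# Illustrative data: the second feature is exactly twice the first.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
direction, explained = first_principal_component(standardize(data))
```

Because the two features are perfectly correlated, a single component explains essentially all of the variance, so the data could be reduced to one dimension without losing information.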

Machine learning algorithms

Machine learning algorithms form the backbone of AI models, enabling them to learn patterns from data and make predictions. Here are some of the most fundamental ones:

- k-means clustering. This widely popular unsupervised learning algorithm partitions data into k distinct clusters based on feature similarity. It is commonly used for exploratory data analysis and segmentation.

- Support Vector Machines (SVMs). This supervised learning algorithm is well suited to classification and, in its regression variant (SVR), to regression tasks. SVMs are effective in high-dimensional spaces, including cases where the number of dimensions exceeds the number of samples.

- Random forest. An ensemble learning method that combines many decision trees, each trained on a random subset of the data and features. Aggregating their predictions reduces overfitting and improves overall predictive accuracy.
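As a sketch of how k-means clustering partitions data, here is Lloyd's algorithm in plain Python: assign each point to its nearest centroid, move each centroid to the mean of its cluster, and repeat until nothing changes. The function name, the initialization (random distinct data points), and the sample points are illustrative choices; scikit-learn's `KMeans` adds smarter initialization and multiple restarts.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm: alternate assigning points and recomputing centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize with k distinct data points
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid's cluster.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        new_centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: assignments will no longer change
        centroids = new_centroids
    return centroids, clusters


# Illustrative 2D points forming two obvious groups.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, clusters = kmeans(points, k=2)
```

Note that k must be chosen up front; in exploratory analysis it is common to try several values and compare the resulting segmentations.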

Transition to deep learning

Deep learning is a key part of AI development because it can build complex models and handle vast amounts of data. Like other areas, it relies on a range of tools, and in your AI development, you might want to use these:

- TensorFlow. Developed by Google, this deep learning framework is highly flexible and scalable, which makes it well suited to both building and deploying deep learning models.

- PyTorch. A popular deep learning framework developed by Facebook (now Meta), favored for its ease of use and dynamic computation graph. It is a common choice for research and rapid prototyping.

- Keras. A high-level neural network API that typically runs on top of TensorFlow and other frameworks. Its simplified interface makes it a great fit for developing deep learning models.

- Convolutional Neural Networks (CNNs). These specialized neural networks are designed to work with grid-like data, such as images. For this reason, CNNs are ideal for object detection and image recognition tasks.

- Recurrent Neural Networks (RNNs). Unlike CNNs, RNNs are built to process sequential data, such as natural language. This makes them well suited to speech recognition, language modeling, and similar tasks.
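The core operation of a CNN layer can be sketched in a few lines: slide a small kernel over the image, take weighted sums, and apply an activation such as ReLU. The example below is a plain-Python illustration with a hand-written vertical-edge kernel; in a real CNN, frameworks like PyTorch or TensorFlow learn the kernel weights during training and run the computation on GPUs. The function names and the toy image are hypothetical.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (no padding, stride 1).

    Like most deep learning frameworks, this computes cross-correlation,
    i.e. the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU activation that typically follows a convolution."""
    return [[max(0, x) for x in row] for row in feature_map]


# A 4x4 "image" with a vertical edge between dark (0) and bright (9) pixels.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-written vertical-edge kernel; a CNN would learn such weights instead.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = relu(conv2d(image, kernel))
```

The resulting feature map is non-zero only in the column where the edge sits, which is how early CNN layers end up acting as edge and texture detectors.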

Natural language processing (NLP)

This subfield of AI is one of the most common in AI development. It gives AI the ability to understand and generate human language, which is why technologies like chatbots, sentiment analysis, and machine translation all revolve around NLP. Here are a few NLP-focused tools:

- NLTK (Natural Language Toolkit). An extensive Python library for building NLP-powered products. It offers a wide range of tools, including tokenization, stemming, and other text-processing utilities.

- spaCy. A user-friendly NLP library designed to process large volumes of text efficiently, a workload some other tools struggle with. It ships with pre-trained pipelines for many languages that anyone can use.

- GPT-4. Developed by OpenAI, GPT-4 is one of the most popular large language models available. It excels at generating text and performing complex language-understanding tasks.

- BERT (Bidirectional Encoder Representations from Transformers). BERT is a pre-trained transformer model by Google. It is designed to understand each word in the context of the words around it. Once fine-tuned, BERT performs well at tasks such as question answering and text classification.
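Most NLP pipelines start by turning text into tokens and then into numbers a model can use. The sketch below shows a simplified regex tokenizer and a bag-of-words representation. The regex and the function names are illustrative assumptions: NLTK (for example, `nltk.word_tokenize`) and spaCy ship far more robust tokenizers, and transformer models like BERT use their own subword tokenizers instead of plain word counts.

```python
import re
from collections import Counter

# Simplified tokenizer: lowercase words and digits, keeping one apostrophe
# suffix (e.g. "don't"). Real tokenizers handle URLs, punctuation, and
# non-English text far better.
TOKEN_PATTERN = re.compile(r"[a-z0-9]+(?:'[a-z]+)?")


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return TOKEN_PATTERN.findall(text.lower())


def bag_of_words(texts):
    """Map each text to token counts over a shared, sorted vocabulary."""
    counts = [Counter(tokenize(t)) for t in texts]
    vocabulary = sorted(set().union(*counts))
    vectors = [[c[word] for word in vocabulary] for c in counts]
    return vocabulary, vectors


vocabulary, vectors = bag_of_words(["Good movie", "Bad movie, bad acting"])
```

Each text becomes a vector of word counts over the same vocabulary, which a classic classifier can consume for tasks like sentiment analysis.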

Computer vision

Next, let’s cover computer vision, which, similar to NLP, lets AI make sense of information from the world. Unlike NLP, though, computer vision focuses on visual data, making it a great fit for object detection, video analysis, and image recognition.

- OpenCV (Open Source Computer Vision Library). A widely used library for real-time computer vision, covering everything from image processing to machine learning on visual data.

- Convolutional Neural Networks (CNNs). As mentioned earlier, CNNs are essential for computer vision tasks, providing the capability to automatically learn and extract features from images.

Robotics and autonomous systems

Robotics and autonomous systems integrate AI with physical machines, enabling them to perform tasks autonomously and interact with their environment.

- Sensor fusion. This process combines data from several sensors to produce more precise and reliable information than any single sensor provides. It is indispensable for AI in robotics and autonomous vehicles.

- SLAM (Simultaneous Localization and Mapping). A technique used to construct or update a map of an unknown environment while simultaneously keeping track of an agent's location. SLAM is vital for autonomous navigation in robotics.

- Monte Carlo Tree Search (MCTS). A heuristic search algorithm used for decision-making processes in robotics and game-playing AI. MCTS is known for its application in strategic planning and optimization.
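One of the simplest forms of sensor fusion is inverse-variance weighting: when several independent sensors measure the same quantity, each reading is weighted by how precise it is. The sketch below assumes independent, unbiased sensors with known noise variances (the sensor values are made up for illustration); for a static scalar quantity, this matches the measurement-update step of a Kalman filter, which robotics systems use to fuse readings over time.

```python
def fuse_measurements(measurements):
    """Inverse-variance weighted fusion of independent sensor readings.

    measurements: list of (value, variance) pairs, e.g. distance estimates
    from a lidar and an ultrasonic sensor. More precise sensors (smaller
    variance) get proportionally more weight.
    Returns the fused value and its variance, which is never larger than
    the smallest input variance.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Hypothetical readings: a noisy sensor (variance 4) and a precise one (variance 1).
fused_value, fused_variance = fuse_measurements([(10.0, 4.0), (12.0, 1.0)])
```

The fused estimate lands closer to the precise sensor's reading, and its variance is lower than either sensor's alone, which is the "more precise and reliable information" the text refers to.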

Cloud and scalable infrastructure

Cloud and scalable infrastructure provide the necessary computational power and storage capabilities to support large-scale AI applications, enabling flexibility and efficiency.

- AWS (Amazon Web Services). A leading cloud service provider offering a wide range of AI and machine learning services, including EC2 for computing, S3 for storage, and SageMaker for model development.

- Google Cloud. Provides robust AI and machine learning services, such as Vertex AI (formerly AI Platform), BigQuery for data analytics, and support for TensorFlow Extended (TFX) for end-to-end machine learning pipelines.

- Azure. Microsoft's cloud platform offering AI and machine learning services, including Azure Machine Learning, Cognitive Services, and Azure Databricks for big data processing.

Data manipulation utilities

Data manipulation utilities are crucial for processing and analyzing large datasets, which are fundamental to training robust AI models. These technologies enable efficient data handling, transformation, and analysis, facilitating the development of high-performance AI systems.

- Apache Spark. An open-source unified analytics engine, Spark is designed for large-scale data processing. It provides an in-memory computing framework that enhances the speed and efficiency of data processing tasks. Spark supports a variety of programming languages, including Python, Scala, and Java, and is widely used for big data analytics and machine learning.

- Apache Hadoop. This framework provides distributed storage and processing for large datasets. Hadoop uses a distributed file system (HDFS) and a processing model called MapReduce, which let it handle vast amounts of data across clusters of computers, making it a key component of big data ecosystems. Hadoop is commonly used alongside other data processing and machine learning tools to build scalable AI solutions.
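The MapReduce model mentioned above can be illustrated with the classic word-count example. This is a single-process sketch of the three phases (map, shuffle, reduce) with hypothetical function names; Hadoop runs the same phases across many machines, and in PySpark the equivalent is typically written with RDD operations such as `flatMap` and `reduceByKey`.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Mapper: emit a (word, 1) pair for every word in one input split."""
    return [(word, 1) for word in document.lower().split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key, as the framework would."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reducer: combine the values for each key into a single result."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(documents):
    # In Hadoop, each document (split) would be mapped on a different node.
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    return reduce_phase(shuffle_phase(mapped))


counts = word_count(["Spark and Hadoop", "Hadoop stores data"])
```

Because mappers and reducers only see their own slice of the data, the work parallelizes naturally, which is what makes the model suitable for datasets too large for one machine.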

Two essential phases of the modern AI tech stack

As you already know, working with AI systems can be tough. A methodical approach simplifies the process, allowing you to build, deploy, and scale your AI solutions efficiently.

This framework, structured into phases, addresses various aspects of the AI life cycle, including data management, transformation, and machine learning. Each phase is crucial, involving specific tools and methodologies. Let's explore these phases to understand their importance and the tools involved.

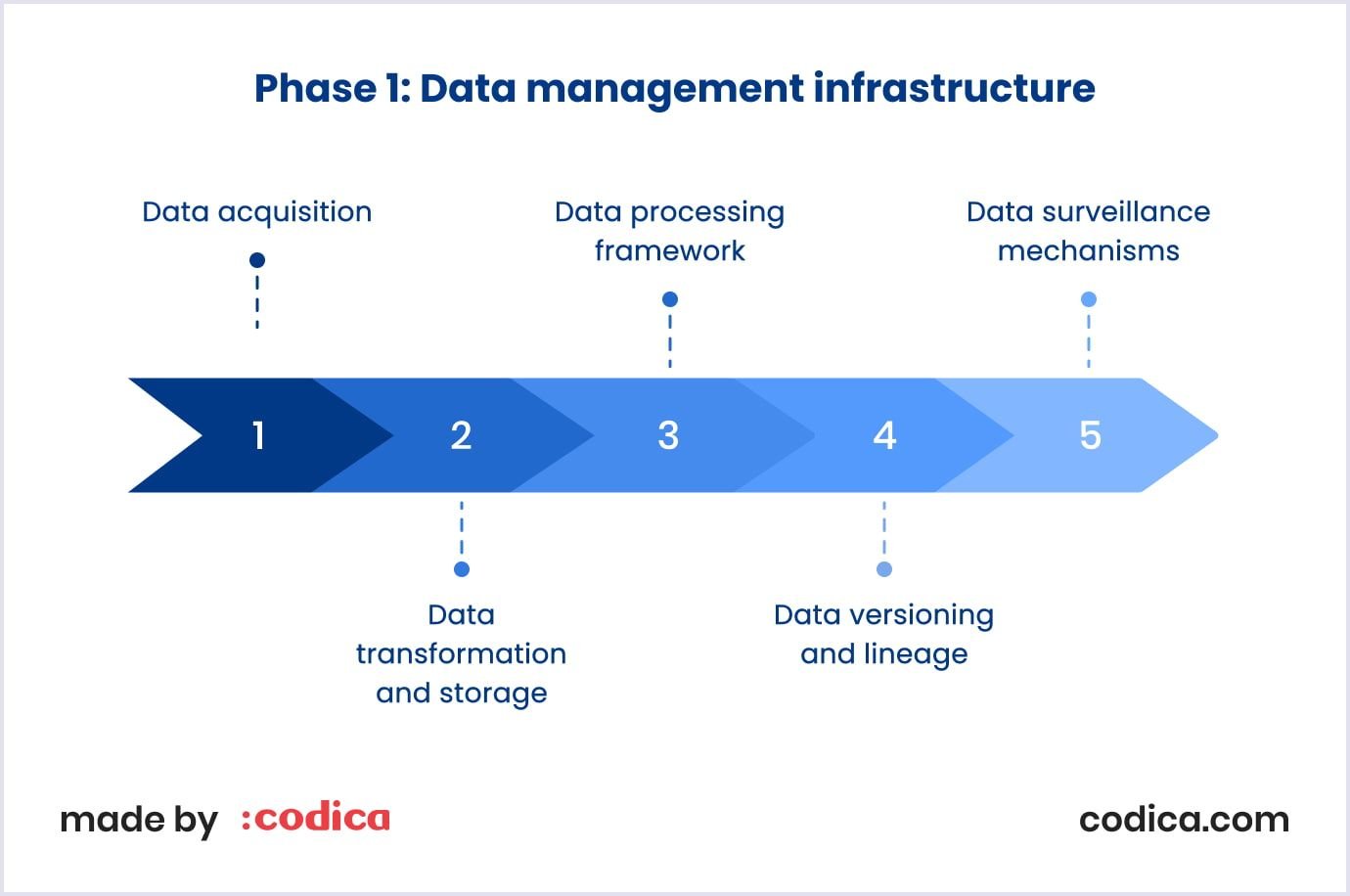

Phase 1: Data management infrastructure

Data is the core of AI capabilities, so handling it effectively is paramount. This phase involves several stages to collect, structure, store, and process data, making it ready for analysis and model training.

Stage 1: Data acquisition

This stage revolves around gathering the data your AI needs. Collected data is typically landed in storage such as Amazon S3 or Google Cloud Storage to build a usable dataset. Next, for supervised machine learning, the data needs to be labeled. Various tools can automate labeling, yet strict manual verification is still necessary.

Stage 2: Data transformation and storage

Once you have all the needed data, use Extract, Transform, Load (ETL) to refine data before storage, or Extract, Load, Transform (ELT) to transform data after it is stored. Reverse ETL works in the opposite direction, syncing data from storage back into end-user tools and interfaces.

Next, the data is stored in a data warehouse if it is structured or in a data lake if it is raw or unstructured. Google Cloud and Microsoft Azure offer extensive storage solutions for both, which makes them popular choices.
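Here is a minimal ETL sketch using only Python's standard library: extract records from a CSV source, transform them (drop incomplete rows, normalize values), and load them into a SQL table. The CSV contents, table name, and cleaning rules are hypothetical, and an in-memory SQLite database stands in for a real warehouse. In an ELT setup, the raw rows would be loaded first and the same cleaning would run afterwards, usually as SQL inside the warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: one row is missing a date, one has a messy country code.
RAW_CSV = """user_id,signup_date,country
1,2024-01-05,us
2,,DE
3,2024-02-11, fr
"""


def extract(raw_text):
    """Extract: read raw records from the source (a CSV string here)."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(records):
    """Transform: drop incomplete rows and normalize country codes."""
    cleaned = []
    for r in records:
        if not r["signup_date"]:
            continue  # skip rows missing a required field
        cleaned.append((int(r["user_id"]), r["signup_date"], r["country"].strip().upper()))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the target store."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users "
        "(user_id INTEGER PRIMARY KEY, signup_date TEXT, country TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
```

Keeping each step as a separate function makes it easy to test transformations in isolation and to swap the source or target without touching the cleaning logic.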

Stage 3: Data processing framework

At this stage, your data is ready to work with. It gets processed into a consumable format using libraries like NumPy and Pandas, while Apache Spark, mentioned earlier, helps when datasets grow too large for a single machine.

Additionally, feature stores like Iguazio, Tecton, and Feast can be used for effective feature management, enhancing the robustness of machine learning pipelines.

Stage 4: Data versioning and lineage

The data you work with should be versioned, which can be done with DVC (Data Version Control) together with Git. For tracking data lineage, Pachyderm can be helpful. Together, these tools ensure repeatability and provide a comprehensive history of your data.
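The idea behind data versioning and lineage can be sketched as content addressing plus a log of transformations: a dataset's version ID is a hash of its contents, and each processing step records which input versions produced which output. This is a simplified illustration of the concept with hypothetical helper names, not how DVC or Pachyderm are actually used; those tools handle files, remote storage, and Git integration for you.

```python
import hashlib
import json


def dataset_version(rows):
    """Content-based dataset ID: the same data always yields the same ID."""
    # Deterministic serialization, so key order or whitespace can't change the hash.
    payload = json.dumps(rows, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def record_lineage(log, output_rows, input_versions, step):
    """Append a lineage entry linking an output dataset to the inputs it came from."""
    version = dataset_version(output_rows)
    log.append({"version": version, "inputs": list(input_versions), "step": step})
    return version


# Illustrative pipeline step: drop rows with a missing value.
raw = [{"id": 1, "x": 3}, {"id": 2, "x": None}]
raw_version = dataset_version(raw)
cleaned = [r for r in raw if r["x"] is not None]
lineage = []
cleaned_version = record_lineage(lineage, cleaned, [raw_version], step="drop_missing_x")
```

With this scheme, rerunning a step on unchanged data reproduces the same version ID, and the lineage log answers "which raw data was this model trained on?"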

Stage 5: Data surveillance mechanisms

After your product goes live, it needs regular attention and maintenance. Solutions like Prometheus and Grafana help monitor the performance and health of deployed models.

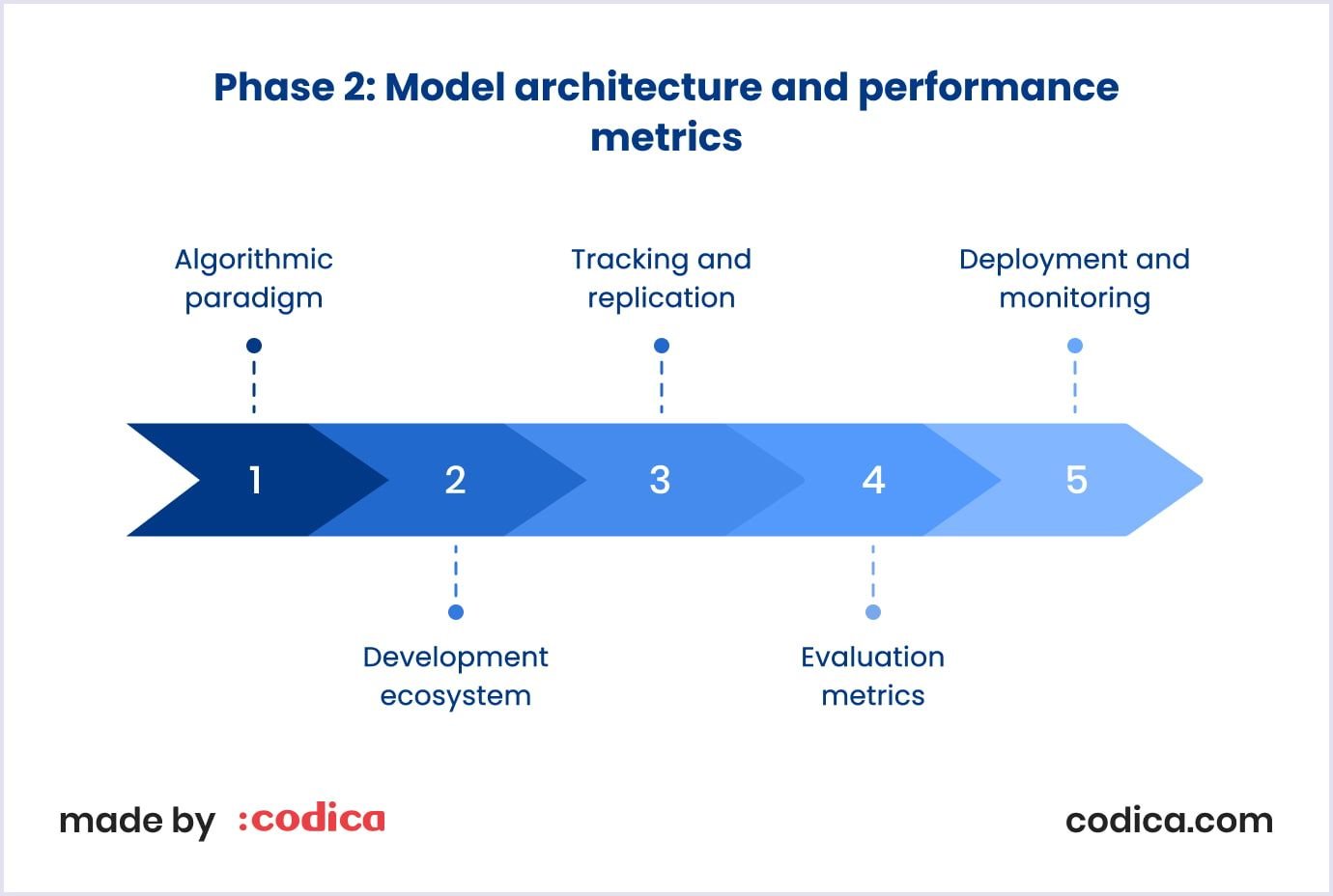

Phase 2: Model architecture and performance metrics

Modeling in AI and machine learning is a continuous and challenging process. It involves not just algorithm selection but also computational limits, operational requirements, and data security. Here are some aspects worth checking out once you complete the first phase.

Stage 1: Algorithmic paradigm

Machine learning libraries like TensorFlow, PyTorch, scikit-learn, and MXNet each have their own advantages, such as computational speed, versatility, ease of use, or wide community support. Choose the library that fits your project, then shift focus to model selection, iterative experimentation, and parameter tuning.

Stage 2: Development ecosystem

When it comes to the ecosystem you will work in, there are several choices. First and foremost, you will need an integrated development environment (IDE) to streamline AI development. IDEs offer extensive functionality for editing, debugging, and running code, helping you complete tasks effectively.

Visual Studio Code (VS Code) is a versatile code editor that integrates, through extensions, with tools like Node.js and Python and many of those mentioned previously. Jupyter and Spyder are also worth noting, as they are particularly helpful for prototyping.

Stage 3: Tracking and replication

Machine learning involves running many experiments, so tracking them systematically is practically obligatory. Tools like MLflow, Neptune, and Weights & Biases simplify experiment tracking, while Layer manages all project metadata on a single platform, fostering the collaborative and scalable environment that robust machine learning initiatives require.
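At its core, experiment tracking means recording, for every training run, the parameters used and the metrics obtained, so runs can be compared and reproduced later. The class below is a hypothetical, in-memory illustration of that idea; MLflow (for example, via `mlflow.log_param` and `mlflow.log_metric`), Neptune, and Weights & Biases provide persistent, collaborative versions with UIs. The parameter and metric values are placeholders, not real results.

```python
import json
import time
import uuid


class ExperimentTracker:
    """Minimal in-memory experiment log: one record per training run.

    Hypothetical helper for illustration only.
    """

    def __init__(self):
        self.runs = []

    def log_run(self, params, metrics):
        """Store a run's hyperparameters and resulting metrics; return its ID."""
        run = {
            "run_id": uuid.uuid4().hex,
            "timestamp": time.time(),
            "params": dict(params),
            "metrics": dict(metrics),
        }
        self.runs.append(run)
        return run["run_id"]

    def best_run(self, metric, higher_is_better=True):
        """Return the run with the best value of `metric`."""
        candidates = [r for r in self.runs if metric in r["metrics"]]
        if not candidates:
            raise ValueError(f"no run recorded metric {metric!r}")
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r["metrics"][metric])

    def to_json(self):
        """Serialize all runs, e.g. to share or archive them."""
        return json.dumps(self.runs, indent=2)


# Placeholder values for illustration, not real experiment results.
tracker = ExperimentTracker()
tracker.log_run({"learning_rate": 0.1, "max_depth": 3}, {"val_accuracy": 0.81})
tracker.log_run({"learning_rate": 0.05, "max_depth": 5}, {"val_accuracy": 0.86})
best = tracker.best_run("val_accuracy")
```

Logging parameters and metrics together is what makes a winning configuration reproducible: you can look up exactly which settings produced the best validation score.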

Stage 4: Evaluation metrics

Performance evaluation in machine learning involves comparing numerous trial outcomes and data categories. Tools like Comet, Evidently AI, and Censius automate this monitoring, allowing data scientists to focus on key objectives. These systems offer standard and customizable metrics for both basic and advanced use cases, identifying issues such as data quality degradation or model drift, which are crucial for root cause analysis.
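As one example of how drift in a feature can be quantified, here is a sketch of the Population Stability Index (PSI), a common statistic for comparing a feature's distribution at training time with its distribution in production. The bin edges, the sample values, and the function name are illustrative assumptions; the monitoring tools named above offer PSI and other drift tests out of the box, and the alert threshold is something each team chooses.

```python
import math


def population_stability_index(expected, actual, bin_edges):
    """PSI of one feature between a reference sample and a live sample.

    bin_edges: sorted edges shared by both samples; values outside them are
    ignored. 0 means identical binned distributions; larger means more drift.
    """
    n_bins = len(bin_edges) - 1

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            for i in range(n_bins):
                # The last bin also includes its right edge.
                in_last_bin = i == n_bins - 1 and v == bin_edges[-1]
                if bin_edges[i] <= v < bin_edges[i + 1] or in_last_bin:
                    counts[i] += 1
                    break
        total = sum(counts)
        if total == 0:
            raise ValueError("no values fall inside the bin edges")
        # Floor empty bins at a tiny share to avoid log(0) and division by zero.
        return [max(c / total, 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Illustrative samples of one feature at training time and in production.
training_sample = [0.5, 1.5, 2.5, 3.5]
production_sample = [1.0, 2.5, 3.0, 3.5]
psi = population_stability_index(training_sample, production_sample, [0, 2, 4])
```

Here the production sample has shifted toward higher values, so the PSI is clearly above zero; computing it per feature on a schedule is a simple way to catch the data quality degradation described above before model accuracy visibly drops.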

Codica expertise

At Codica, our team's extensive expertise allows us to deliver AI development services with a wide range of functionality and benefits. Among other things, we deliver:

- Comprehensive AI strategy and consulting. We create tailored AI strategies to meet specific business needs and offer consulting services to help organizations integrate AI smoothly and effectively.

- Custom AI solutions development. We develop custom AI solutions to address unique business challenges, ensuring they are scalable, reliable, and aligned with client objectives from concept to deployment.

- Data management and preprocessing. Our services include data collection, cleaning, annotation, and transformation, ensuring high-quality data for AI projects. We handle both structured and unstructured data to provide a solid foundation.

- Machine learning and deep learning expertise. We use advanced frameworks mentioned earlier, namely TensorFlow, PyTorch, and others, to build next-level AI models for predictive analytics, NLP, and computer vision, delivering high-performance solutions.

- AI model training and optimization. We provide comprehensive model training and optimization, employing techniques for hyperparameter tuning and iterative testing to ensure accurate and efficient AI models.

- Deployment and integration. We offer end-to-end AI solution deployment and integration services, ensuring seamless integration into existing systems and workflows for scalable and maintainable solutions.

Introducing AI to your business can be a highly beneficial venture. When chosen properly, AI solutions can deliver fast ROI by providing precise data insights and enabling automation and personalization for your app or website. The benefits vary depending on which AI you use.

Some integrations can analyze customer preferences and behaviors, helping you engage current customers and attract new ones, while others can identify patterns in historical data to predict future revenue outcomes for your product.

Ultimately, the automation and data management that AI provides can help you make more informed decisions about further business growth. From a customer perspective, AI is a valuable addition as well, thanks to its versatility and ability to help with a wide variety of tasks.

Conclusion

To wrap things up, working with AI nowadays is a double-edged sword. On the one hand, as you can see, many tools and technologies are involved in making things work right. On the other hand, the task is not as difficult as it used to be, thanks to the plethora of tools available on the market.

Therefore, choosing the right set of tools is vital even before development starts. Yet it is perfectly normal to struggle with the choice, as many bottlenecks are not visible at first glance. If that’s the case, feel free to contact us at Codica, as we provide expert services to deliver AI-powered solutions.

With dozens of projects in our portfolio, you may even find one similar to yours, a sign that we know how to deliver your specific platform or solution.