As artificial intelligence (AI) is used more widely, it also poses risks that need to be addressed. Responsible AI refers to a set of principles and techniques that keep AI use safe and respectful of human values and rights.

Specific regulations already lay the groundwork for responsible AI. Although they are only first steps, these regulations and frameworks are already shaping how responsible AI will be developed.

In this comprehensive guide, we discuss what responsible AI is, its key considerations, best practices, real-world examples, and more, to help you understand how you can improve your AI system. Let’s dive in.

Responsible AI definition

What is responsible AI? The term means that AI systems must be unbiased, transparent, and accountable as they process data, including sensitive information.

Responsible AI is not a solved problem; it is still under development. Many organizations and businesses are working on corporate and local regulations to make AI not only effective but also ethical.

Why is it essential that AI be safe and responsible? One key reason is trust between brands and customers. Users value the benefits AI provides, but they also value their security. Brands, in turn, must provide trustworthy services to earn customers’ confidence and have an opportunity to grow.

Let’s see what aspects are covered by current regulations and how you should implement responsible AI into your solution to keep it ethical and safe.


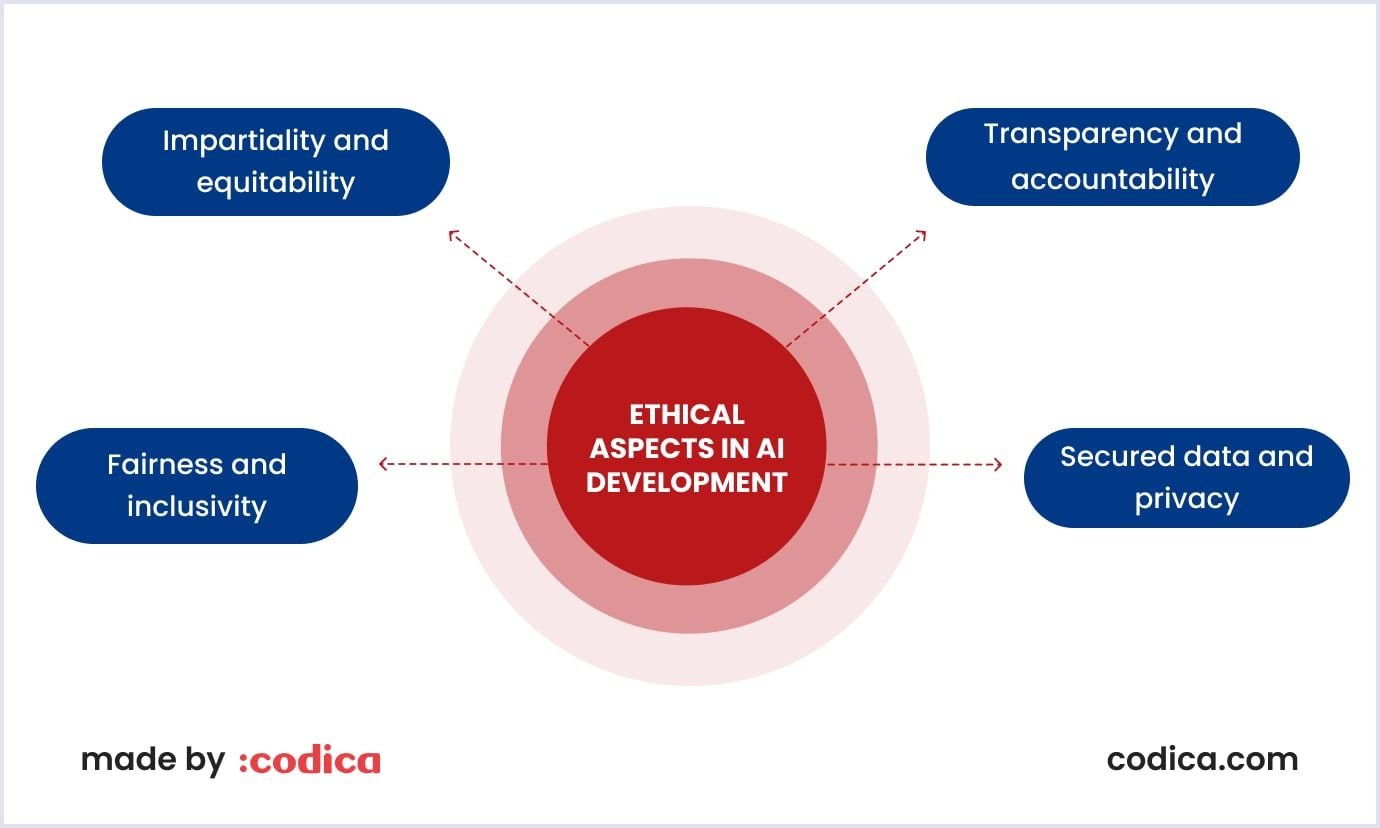

Ethical considerations in AI development

Before we discuss the best practices for responsible artificial intelligence development, let’s examine the ethical principles of AI. They form the foundation for technical and legal developments on responsible AI.

Ensuring fairness and inclusivity. When you train an AI system, it bases its output on the data you feed it. That is why you should make sure your training data are inclusive and unbiased. This is especially vital in hiring, lending, and law enforcement.

Carefully audit your data before feeding it into the AI system, and keep monitoring and updating your AI systems so they stay fair over time.
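One common way to start such an audit is to compare outcome rates across groups. The Python sketch below computes the share of positive outcomes per group and the ratio of the lowest rate to the highest. The 0.8 threshold follows the "four-fifths rule", a heuristic from US employment guidance; the data, group labels, and function names are hypothetical, and a single metric like this is only a first warning signal, not proof that a system is fair.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of positive outcomes (e.g. 'hired') for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest group selection rate."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to measure
    return min(rates.values()) / highest

# Hypothetical hiring outcomes: (group label, 1 = selected, 0 = rejected)
decisions = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(decisions)
print(rates)            # {'A': 0.75, 'B': 0.25}
print(round(ratio, 2))  # 0.33
if ratio < 0.8:  # four-fifths heuristic
    print("Potential adverse impact: review the training data and the model")
```

In a real audit, you would run this check on each protected attribute and at each stage of the pipeline (raw data, labels, model outputs), since bias can enter at any of them.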

Impartiality and equitability. Training datasets for AI models may contain bias. Standards therefore require AI systems to treat people equitably. For responsible AI solutions, the collected data must be diverse and inclusive and must undergo regular audits.

Algorithmic clarity and accountability. Companies must disclose how their AI algorithms process data. Algorithmic transparency is especially important in finance, healthcare, and criminal justice, because these domains directly affect people’s lives. Audits and third-party reviews help ensure that AI algorithms are transparent and compliant.

Securing data and privacy. AI systems are often part of solutions that handle large volumes of data, including sensitive information. It is therefore vital to apply security tools and data protection techniques such as encryption, anonymization, and access controls. You must also comply with the applicable state and local data privacy standards.
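As an illustration of one such technique, the Python sketch below pseudonymizes direct identifiers with a keyed hash (HMAC-SHA256 from the standard library), so records can still be linked to each other without exposing the raw email address. The field names and key handling are assumptions for illustration. Note that pseudonymization is weaker than full anonymization: under GDPR, pseudonymized data is still personal data, so this complements encryption and access controls rather than replacing them.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret key generated at startup. In production it would come from
# a key management system and be stored separately from the pseudonymized data.
PSEUDONYM_KEY = secrets.token_bytes(32)

def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with a keyed hash; the same input always maps to the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def prepare_record(record: dict, identifier_fields: set) -> dict:
    """Pseudonymize identifier fields and pass all other fields through unchanged."""
    return {
        field: pseudonymize(str(value)) if field in identifier_fields else value
        for field, value in record.items()
    }

raw = {"email": "jane@example.com", "age_band": "30-39", "diagnosis_code": "J45"}
safe = prepare_record(raw, {"email"})
print(safe)
```

A keyed hash is used instead of a plain SHA-256 digest because common identifiers such as email addresses are easy to guess and hash; without the secret key, an attacker cannot rebuild the mapping.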

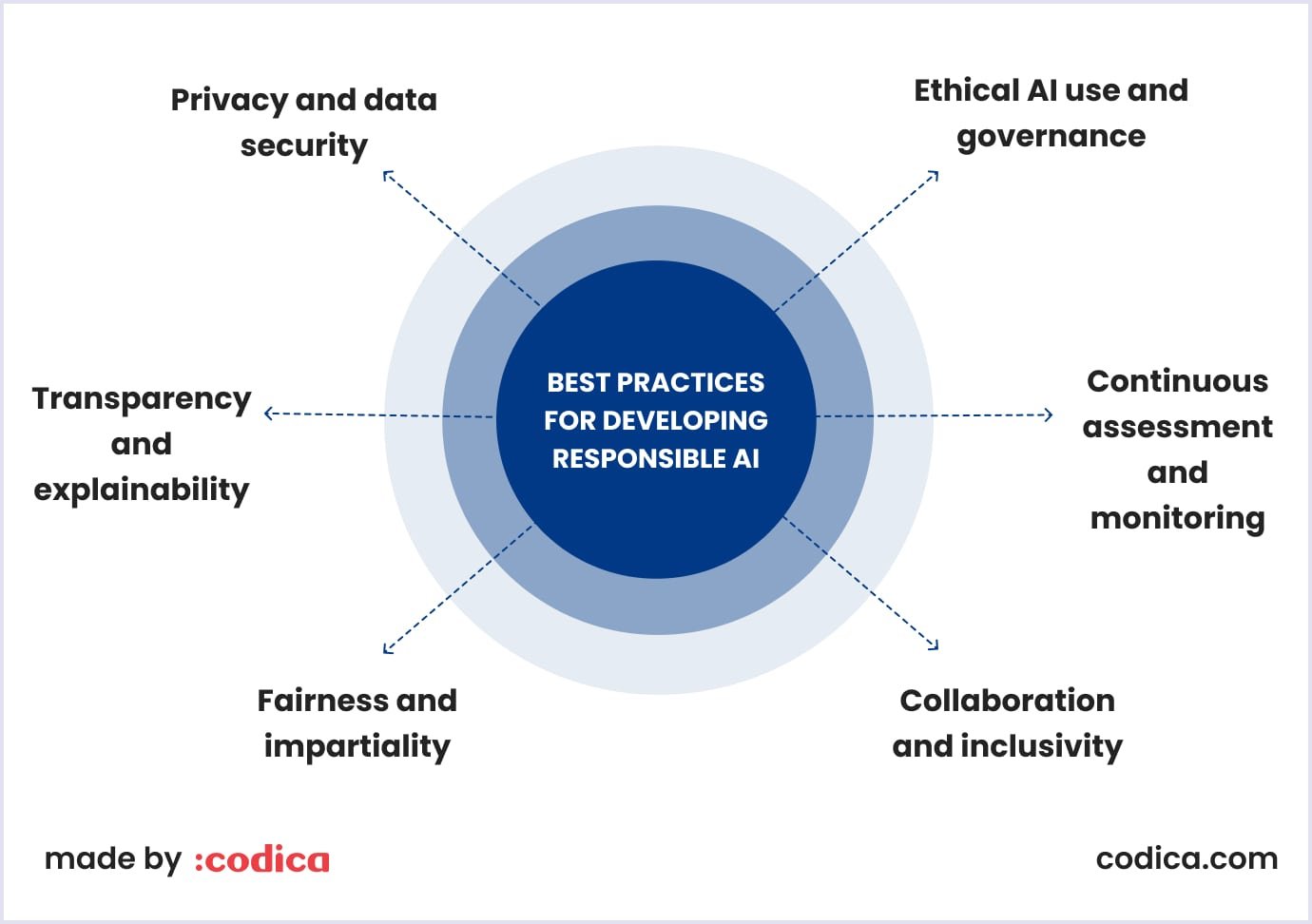

Best practices for developing responsible AI

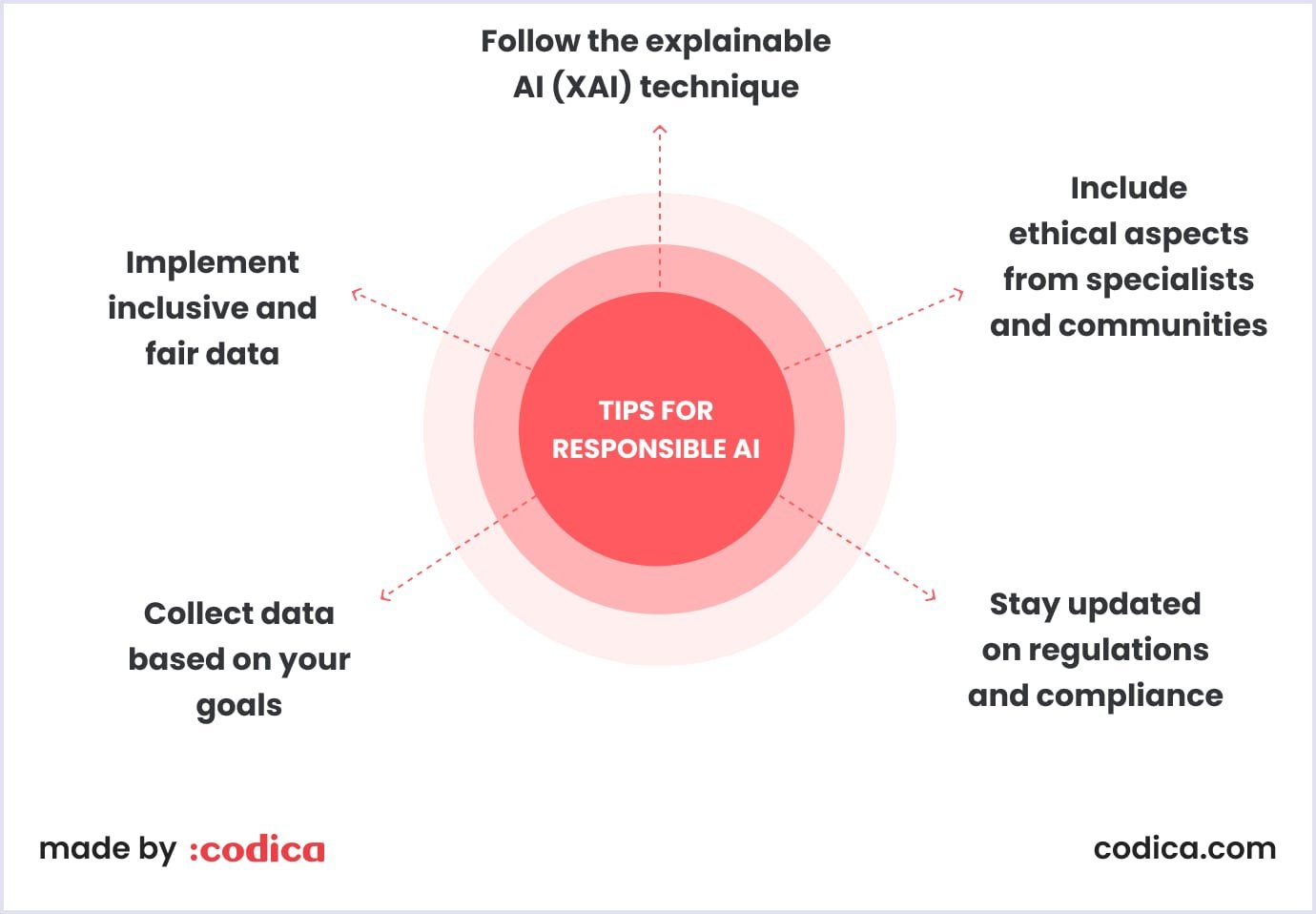

Now that we have covered the basic principles of responsible artificial intelligence, let’s discuss the actual steps to implement it.

Fairness and bias mitigation. Companies should use AI datasets that represent the interests of various groups of people. They should also audit their AI data regularly to keep it relevant and unbiased.

Transparency and explainability. Responsible AI solutions should be designed so that stakeholders and other organizations can understand how they work. A company should use techniques that document when and how the data was collected and how the AI system produces its outputs.
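One lightweight way to document when and how data was collected is to keep a structured provenance record next to the model, loosely in the spirit of "model cards" and "datasheets for datasets". The Python sketch below is an assumption, not a standard schema: the `DatasetRecord` and `ModelCard` classes, their fields, and the example values are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date

@dataclass
class DatasetRecord:
    """Hypothetical provenance entry: where, when, and how a training dataset was collected."""
    name: str
    source: str
    collected_from: date
    collected_to: date
    collection_method: str
    consent_basis: str
    known_limitations: list = field(default_factory=list)

@dataclass
class ModelCard:
    """Hypothetical summary that links a model version to its data and intended use."""
    model_name: str
    version: str
    intended_use: str
    output_description: str
    datasets: list = field(default_factory=list)

    def to_json(self) -> str:
        # asdict() converts nested dataclasses; default=str serializes dates as ISO strings
        return json.dumps(asdict(self), default=str, indent=2)

card = ModelCard(
    model_name="loan-risk-scorer",
    version="1.2.0",
    intended_use="Pre-screening of loan applications; a human reviewer makes the final decision",
    output_description="Estimated probability of default between 0 and 1",
    datasets=[
        DatasetRecord(
            name="applications_2022",
            source="internal CRM export",
            collected_from=date(2022, 1, 1),
            collected_to=date(2022, 12, 31),
            collection_method="online application form",
            consent_basis="contract",
            known_limitations=["few applicants over 70"],
        )
    ],
)
print(card.to_json())
```

Storing this record in version control with the model makes it easy for auditors or oversight bodies to see which data a given model version was trained on.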

Privacy and data security. The data your AI system handles should be encrypted and anonymized to protect users. By following data protection standards such as GDPR and CCPA, you protect your customers’ data and earn the trust that brings them back to your product.

Ethical AI use and governance. Your company should establish guidelines that stakeholders and oversight organizations can review. For example, oversight committees and stakeholders should be able to check your ethical guidelines when necessary.

Continuous assessment and monitoring. An AI model needs continuous monitoring and regular updates. Keeping your model current helps ensure that it follows ethical standards and stays secure for your customers.
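A common monitoring check is to compare the distribution of a model input in production with the distribution seen at training time. The Python sketch below computes the Population Stability Index (PSI) over pre-binned proportions. The bins, the example shares, and the thresholds (below 0.1 stable, 0.1 to 0.25 moderate, above 0.25 major) are assumptions; the thresholds are a widely used rule of thumb, not a formal standard.

```python
import math

def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions given as proportions."""
    total = 0.0
    for e, a in zip(expected, actual):
        e = max(e, eps)  # avoid log(0) and division by zero for empty bins
        a = max(a, eps)
        total += (a - e) * math.log(a / e)
    return total

def drift_status(score):
    """Map a PSI score to a label using a common rule of thumb."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate shift"
    return "major shift"

# Hypothetical share of a feature's values per bin
baseline = [0.25, 0.25, 0.25, 0.25]  # at training time
current = [0.10, 0.20, 0.30, 0.40]   # in recent production traffic

score = psi(baseline, current)
print(f"PSI = {score:.3f} ({drift_status(score)})")
```

A "moderate" or "major" result does not prove the model is wrong; it is a trigger to re-check fairness and accuracy metrics and, if needed, retrain on fresher data.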

Collaboration and inclusivity. For the best results, an AI system should be developed and supported by different parties. Stakeholders, developers, ethics experts, users, and other specialists provide different perspectives and make AI systems more versatile.

Regulatory and legal aspects

Gartner predicts that 50% of governments will enforce the ethical use of AI by 2026. Such regulation covers the following areas.

Data privacy and protection

Modern AI systems are built in line with regulatory standards on data protection. For example, the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States provide strict guidelines. They set rules for the safe collection, processing, and storage of data, in line with responsible AI principles.

Intellectual property (IP) and AI

For now, legislation is lacking on how IP is protected when AI is involved and on who holds the rights to AI-generated works. The root problem is that AI models are trained on human-generated content, so AI-generated output may contain copyrighted material. Brands should keep this in mind and make sure they have the right to use AI-generated content in their marketing or product design.

Safety and reliability standards

AI systems are used in critical devices, such as self-driving vehicles and healthcare equipment. As a result, companies and governments are now discussing how the relevant safety standards can be improved.

Examples of legal regulation of AI in different countries

Most countries provide national guidelines for AI. At the same time, the European Union and Brazil are building their regulations on a risk-based approach, which often draws on clinical trials and published scientific research.

For example, the European Union adopted the EU AI Act, the first comprehensive regulation on AI. It regulates the transparency and ethical principles of AI. It also outlines how AI should be used in manufacturing, criminal cases, copyright, and other critical domains.

On the other hand, the US has introduced sectoral guidelines, such as regulations for self-driving vehicles. Canada and Australia have set regulations to ensure that AI works according to ethical standards.

Responsible AI examples in different industries

Now that we have covered the basics and regulations, let’s look at several examples of successfully implemented responsible AI.

The FICO Score is a system that assesses credit risk using AI algorithms. It applies scoring algorithms and predictive analytics to mortgage, auto, bankcard, and consumer lending. Thanks to regular audits and optimization, the FICO Score delivers comprehensive and reliable results in line with responsible AI practices.

IBM’s watsonx Orchestrate is an HR system that supports unbiased talent acquisition. The platform promotes fairness and inclusivity by drawing on diverse candidate pools, which helps managers select candidates fairly.

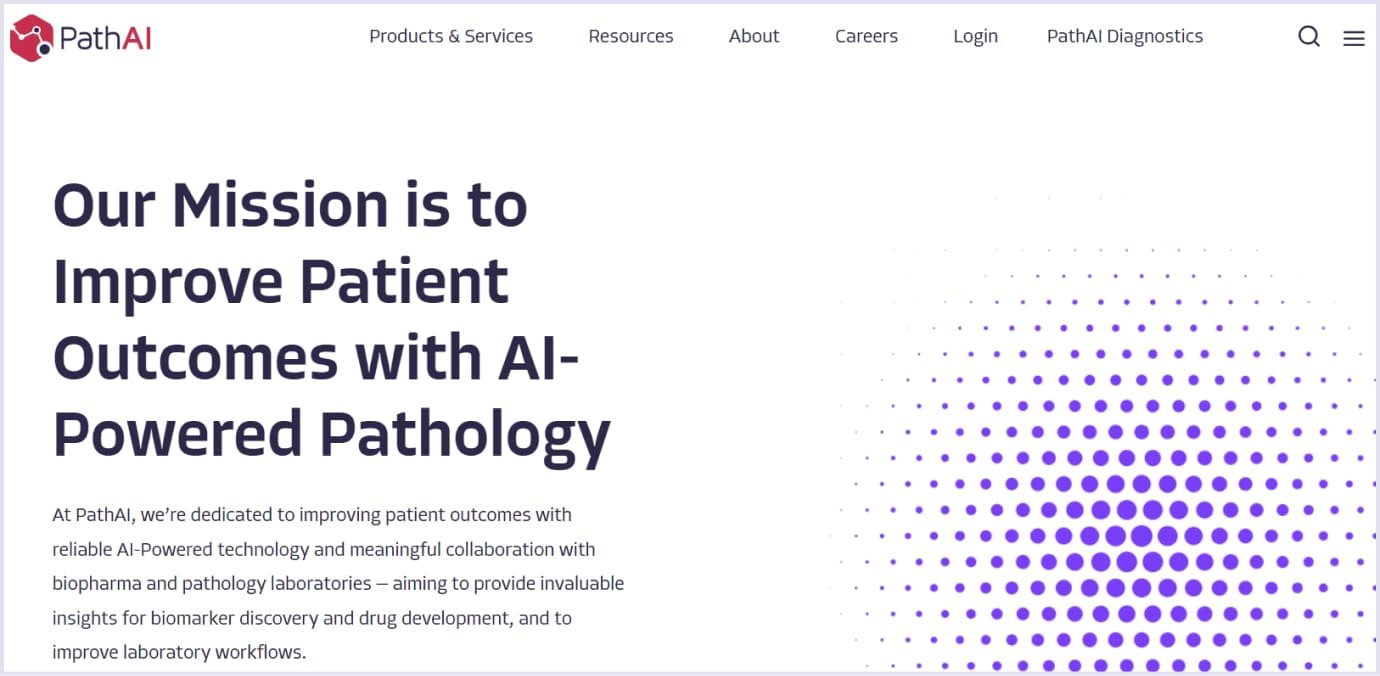

PathAI is an AI-powered tool that helps pathologists diagnose diseases. The solution’s AI system is validated through rigorous clinical trials and peer review.

Ada Health, an AI-powered chatbot, helps users assess possible conditions based on their symptoms. The platform boasts over 13 million users globally and over 34 million symptom assessments. To make its AI responsible, the company secures data, implements ethical principles, performs thorough testing, and conducts regular audits.

Planet Labs, an Earth imaging company, uses AI to analyze satellite imagery. The company encrypts data and uses transparent methods for data processing and storage. Thanks to these methods, Planet Labs helps monitor the environment, climate, and agricultural yields.

Challenges in implementing responsible AI

AI brings new opportunities, but also new challenges and risks. Companies that introduce AI into their products and processes must follow the relevant ethical standards and ensure compliance. Let’s look at the top challenges in implementing responsible AI and how you can overcome them.

Data privacy and security. Companies implementing AI must follow current security standards to protect customer information. This involves applying encryption and anonymization and preventing unauthorized access to accounts.

To ensure data security and privacy, avoid collecting data for its own sake. Instead, focus on what is necessary for your AI goals. Also, AI systems should be built following “privacy by design,” meaning that your AI system should be secured from the very start of development.
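Data minimization can be enforced in code with an allow-list: anything a model does not need is dropped before it is stored or used for training. In the Python sketch below, the field names and the churn-prediction use case are hypothetical, chosen only to show the pattern.

```python
# Hypothetical allow-list: the only fields a churn-prediction model actually needs.
REQUIRED_FIELDS = frozenset({"age_band", "region", "purchase_count"})

def minimize(record: dict, allowed=REQUIRED_FIELDS) -> dict:
    """Keep only allow-listed fields; everything else is dropped before storage."""
    return {key: value for key, value in record.items() if key in allowed}

def missing_fields(record: dict, allowed=REQUIRED_FIELDS) -> set:
    """Report required fields the record lacks, so data gaps are visible early."""
    return set(allowed) - set(record)

incoming = {
    "full_name": "Jane Doe",
    "email": "jane@example.com",
    "age_band": "30-39",
    "region": "EU",
    "purchase_count": 7,
    "browsing_history": ["/pricing", "/blog"],
}

stored = minimize(incoming)
print(stored)                  # {'age_band': '30-39', 'region': 'EU', 'purchase_count': 7}
print(missing_fields(stored))  # set()
```

An allow-list is safer than a block-list here: a new field added upstream is excluded by default instead of silently flowing into storage.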

Bias and fairness. AI systems are trained on human-generated data that may contain prejudice. When you introduce AI, it can amplify biases that were present in the input datasets.

Reducing bias in AI requires continuous retraining and auditing. Question your algorithms and involve different parties and specialists to gain comprehensive perspectives.
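One way to question an algorithm’s behavior is to check whether it catches true positives equally well for every group, a criterion often called "equal opportunity". The Python sketch below computes the true positive rate per group and the gap between the best- and worst-served groups. The evaluation rows and group labels are hypothetical, and which fairness metric fits best depends on your domain.

```python
from collections import defaultdict

def true_positive_rates(rows):
    """rows: (group, actual, predicted), 1 = positive. Returns the true positive rate per group."""
    hits = defaultdict(int)
    positives = defaultdict(int)
    for group, actual, predicted in rows:
        if actual == 1:  # only truly positive cases count toward the TPR
            positives[group] += 1
            hits[group] += int(predicted == 1)
    return {group: hits[group] / positives[group] for group in positives}

def equal_opportunity_gap(rows):
    """Difference between the highest and lowest per-group true positive rate."""
    rates = true_positive_rates(rows)
    return max(rates.values()) - min(rates.values())

# Hypothetical evaluation rows: (group, actual outcome, model prediction)
evaluation = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]

print(true_positive_rates(evaluation))
print(f"equal opportunity gap: {equal_opportunity_gap(evaluation):.2f}")
```

Here the model misses qualified members of group B twice as often as those of group A, which is the kind of finding that should prompt a closer look at the training data and features.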

Algorithm explainability. AI systems must use algorithms that are clear and explainable. Such algorithms should be understandable for stakeholders and oversight organizations.

Your system must be explainable; otherwise, it exposes you to significant penalty and liability risks. Use explainable AI (XAI) techniques, which help trace how a model arrives at each decision. XAI helps keep your system transparent and protects you against the risk of unexpected penalties.
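To show the idea behind many XAI techniques, the Python sketch below explains a single decision of a simple logistic model by splitting its score into per-feature contributions relative to a reference applicant. For a linear model this split is exact; tools such as SHAP or LIME generalize the idea to more complex models. The model, its weights, and the applicant data are hypothetical.

```python
import math

# Hypothetical logistic credit-scoring model; weights and bias are illustrative only.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -0.5

def log_odds(features: dict) -> float:
    """Linear score (log-odds) of the model."""
    return BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)

def predict(features: dict) -> float:
    """Approval probability via the logistic function."""
    return 1 / (1 + math.exp(-log_odds(features)))

def explain(features: dict, reference: dict) -> dict:
    """Per-feature contribution to the log-odds relative to a reference applicant.

    For a linear model, these terms sum exactly to log_odds(features) - log_odds(reference).
    """
    return {name: WEIGHTS[name] * (features[name] - reference[name]) for name in WEIGHTS}

applicant = {"income_k": 55, "debt_ratio": 0.45, "late_payments": 2}
reference = {"income_k": 60, "debt_ratio": 0.30, "late_payments": 0}

contributions = explain(applicant, reference)
print(f"approval probability: {predict(applicant):.2f}")
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>14}: {value:+.2f}")
```

An output like this lets a reviewer tell an applicant which factors, here mainly the late payments, drove a decision, which is exactly the kind of traceability regulators and oversight bodies ask for.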

Ethical considerations. These involve promoting inclusivity, avoiding harm, and aligning AI systems with societal values. These aspects are challenging because AI technology constantly evolves and there are no universal ethical standards.

To make your AI ethical, include diverse perspectives. Stakeholders, regulators, and relevant communities each bring their own views, so reflect them in your AI system and revisit them over time.

Regulatory compliance. As different countries issue their own AI regulations, their requirements may contradict each other. That is why organizations should invest in legal and compliance expertise, even though it can be costly.

Stay informed about new legal requirements in the AI domain. You can also engage legal and compliance experts to help you keep up with regulatory updates.

The future of responsible AI

As Accenture’s survey shows, 95% of global executives believe that AI is crucial to their organization’s processes. So, we need well-thought-out practices to make AI reliable and transparent.

As AI technology evolves, it will rely more heavily on ethical practices. Current research focuses on making AI both technically advanced and socially beneficial. Initiatives in responsible AI also promote transparency and explainability in decision-making processes.

For example, Google’s recommendations on responsible AI development outline the importance of human-centered design, raw data examination, AI testing, and other techniques.

We expect robust frameworks for responsible AI to emerge. They will be built on multidisciplinary input and will promote human values and societal norms.

How Codica can help you with responsible AI

Implementing responsible AI requires solid technical foundations and experience. When providing AI development services, we follow the latest technology standards and make the inclusivity and transparency of AI solutions our top priorities. This helps make your product compliant and beneficial to your customers.

We provide AIaaS services and create automation solutions, NLP systems, neural networks, expert systems, and more. Our team works with the insurance, healthcare, automotive, media, and rental industries.

From product discovery through the entire development process, we collaborate with you on your project. If necessary, we adjust requirements and make suggestions based on the latest tech standards. This way, we complete the project within your budget and the agreed timeframe.

To wrap up

Responsible AI is based on principles that promote human values and safety. Organizations and companies are currently working on comprehensive regulations that outline the norms for responsible AI, paving the way for responsible AI to be used globally, across borders.

If you have a project that needs compliant and inclusive AI, we are here to help. Contact us to discuss the details and get a free quote.